Appending to a file means adding new content to the end of an existing file rather than overwriting it. The file’s original content is not modified or deleted, which is useful when you want to add information without losing the existing data. In Bash, there are several ways to append data to the end of a file. Here are two common methods that you can use: Method 1: Using the “echo” command…
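
As a minimal sketch of the idea described above, the snippet below contrasts overwriting (`>`) with appending (`>>`) using `echo`. The scratch file created with `mktemp` and the variable name `logfile` are assumptions made for illustration; they do not come from the article.

```shell
#!/usr/bin/env bash
# Hypothetical scratch file, used only for this illustration.
logfile="$(mktemp)"

# ">" overwrites: the file now holds exactly one line.
echo "first line" > "$logfile"

# ">>" appends: the existing line is kept and a new one is added at the end.
echo "second line" >> "$logfile"

# Show the result: both lines, in the order they were written.
cat "$logfile"
```

Running the second `echo` again would add a third line rather than replace anything, which is the behavior the excerpt describes.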

Author: Rahul

Getting the current date and time is a common task in Python programming. There are several ways to do this, depending on your needs and the libraries you have available. In this article, we will explore some of the most common methods for getting the current date and time in Python, including using the built-in datetime module, the time module, and the dateutil module. We will also discuss how to format the date and time values as strings, and how to convert between timezones. Whether you are working with timestamps, scheduling tasks, or just want to display the current date…

MongoDB is a popular NoSQL database that is widely used for storing and manipulating large amounts of data. It is known for its flexibility, scalability, and performance, making it a great choice for a variety of applications. In this article, we will look at how to connect to a MongoDB database in Python using the `pymongo` library, which is a MongoDB driver for Python. We will also cover some basic operations such as inserting data, querying the database, and updating documents. Prerequisites Before we begin, you will need to install the following: Python 3: You can download and install Python…

Google Chrome is a popular web browser that is widely used for accessing the internet. It is known for its fast performance, security features, and support for a wide range of web technologies. If you want to use Google Chrome on your Ubuntu or Debian system, you can install it using the steps described in this article. There are two methods for installing Google Chrome on Ubuntu and Debian: using the official Google Chrome repository and downloading the Debian package from the Google Chrome website. In this article, we will cover both methods. Method 1: Downloading the Debian Package from…

In computer networking, the term “localhost” refers to the device you are currently working on. It is used to access the network services that are running on the host via the loopback network interface. Using the loopback interface bypasses any local network interface hardware. The term “localhost” is derived from the combination of the words “local” and “host”. The word “local” refers to the device itself, while the word “host” refers to the device hosting a service or application. Therefore, “localhost” literally means “the local host.” In most cases, localhost is used to access the network services that are running…

OpenCV (Open Source Computer Vision) is a free and open-source library of computer vision and machine learning algorithms that can be used to process and analyze images and video. It is widely used in a variety of applications, including object detection, image and video processing, and augmented reality. In this article, we will cover two different methods for installing OpenCV in Python: using “PIP” (the Python Package Manager) and using “Anaconda” (a free and open-source distribution of Python and R for data science and machine learning). Method 1: Installing OpenCV using PIP PIP is the default package manager for Python.…

Deleting the last few commits from a Git repository is a relatively simple process that can be accomplished with a few commands. In this guide, we will explain how to delete the last few commits from a Git repository and discuss why this might be necessary. We will also provide step-by-step instructions and cover common issues that may arise in the process. By the end of this guide, you should have a good understanding of how to delete the last few commits from a Git repository. Delete…

In Bash, you can store the standard error output of a command in a variable by using the `2>&1` operator and the `$()` command substitution syntax. Here, `2>` redirects error messages to `&1`, which represents standard output. Command substitution captures standard output, so the redirected error messages end up in the variable. For example, to store the standard error output of the `ls` command in a variable named `errors`, you can use the following command: `errors=$(ls non-existent-file 2>&1)` Alternatively, you can use the `$?` special parameter to store the exit status of a command to a variable. The exit status is…
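
The following sketch puts the two ideas from the excerpt side by side: capturing error output with `2>&1` inside `$()`, and reading the exit status from `$?`. The variable names `status` and `only_errors` are assumptions for illustration, and the last command is a variation not taken from the article: it discards standard output so that only standard error is captured.

```shell
#!/usr/bin/env bash
# Capture what `ls` writes to standard error (standard output is merged in too).
errors=$(ls non-existent-file 2>&1)

# $? holds the exit status of the command that ran inside $(...).
status=$?

echo "exit status: $status"
echo "captured: $errors"

# Variation (assumption, not from the article): capture only stderr.
# Order matters: stderr is first pointed at the capture pipe,
# then stdout is sent to /dev/null.
only_errors=$(ls non-existent-file 2>&1 >/dev/null)
echo "stderr only: $only_errors"
```

This assumes no file named `non-existent-file` exists in the current directory, so `ls` fails and the exit status is non-zero.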

Zsh, or the Z Shell, is a powerful and flexible command-line shell for Unix-like operating systems, including Linux and macOS. It offers many features and improvements over the default Bash shell, including improved command completion, spelling correction, and customizable prompts. In this article, we will learn how to install and configure Zsh on Ubuntu and Debian systems. Some of the benefits of using Zsh over Bash include: Enhanced command completion: Zsh offers better command completion than Bash, including the ability to use tab completion for options and arguments. More powerful globbing: Zsh has a more powerful globbing (wildcard matching) system…

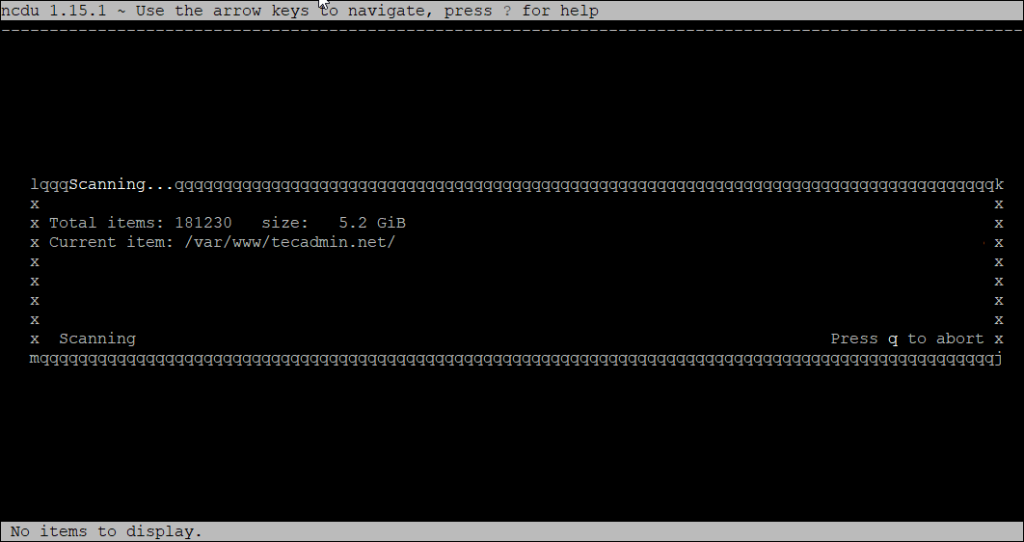

On Linux, you may want to find large files or directories that are taking up too much space. This guide will show you simple steps to search for these large files and directories using terminal commands. Using the du Command The `du` command (disk usage) is useful for checking the size of files and directories. Here’s how to use it: Open the Terminal: Open the terminal window on your Linux system. Search for Large Directories: To check which directories are taking up the most space, use the following command: `du -h --max-depth=1 /path/to/directory` Example: `du -h --max-depth=1 /home/user` This command…
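
A small sketch of how the `du` command above might be used in practice. Sorting the output with `sort -rh` and trimming it with `head` are additions for illustration, not steps taken from the article, and the `target` and `report` variables are hypothetical. It assumes GNU coreutils, as found on most Linux systems.

```shell
#!/usr/bin/env bash
# Directory to inspect; defaults to the current directory (assumption).
target="${1:-.}"

# Summarize each first-level subdirectory in human-readable units,
# hide permission errors, sort largest first, and keep the top 10 entries.
report=$(du -h --max-depth=1 "$target" 2>/dev/null | sort -rh | head -n 10)

echo "$report"
```

The first line of the output is normally the total for the target directory itself, followed by its largest subdirectories.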