Hadoop has been around for some time and has become one of the most popular open-source big data frameworks for data storage and analysis. It processes data in batches and is known for its scalable, cost-effective, distributed computing capabilities. You can use it to store, manage, and analyze your data in an automated way. With Hadoop installed on your Fedora system, you can access important analytic services with ease.

This article shows you how to install Apache Hadoop on CentOS and Fedora systems, for local usage as well as on a production server.

1. Prerequisites

Java is the primary requirement for running Hadoop on any system, so make sure Java is installed before you continue. You can check by running the java -version command. If Java is not installed on your system, install it first.
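As a quick check for this prerequisite, the sketch below reports whether a java binary is on the PATH and, if so, prints its version. The java_status helper name is made up for this illustration; java -version is the standard Java command.

```shell
# Hypothetical helper: print "found" if a java binary is on the PATH, else "missing".
java_status() {
    if command -v java >/dev/null 2>&1; then
        echo "found"
    else
        echo "missing"
    fi
}

status="$(java_status)"
if [ "$status" = "found" ]; then
    # java -version writes its report to stderr, so merge it into stdout.
    java -version 2>&1 || echo "java is on the PATH but failed to run"
else
    echo "Java is not installed; install it before continuing."
fi
```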

2. Create Hadoop User

We recommend creating a normal (non-root) account for running Hadoop. Create the account and set its password using the following commands.

adduser hadoop

passwd hadoop

After creating the account, you also need to set up key-based SSH to its own account. To do this, execute the following commands.

su - hadoop

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

Let’s verify key-based login. The command below should not ask for a password, but the first time it will prompt you to add the host's RSA key to the list of known hosts.

ssh localhost

exit
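The same check can be scripted so that it never stops at a prompt. The sketch below uses OpenSSH's BatchMode option, which makes ssh fail instead of asking for a password. The helper name can_login_without_password is an assumption made for this example. Run it only after the first interactive login above, because in batch mode ssh cannot ask to accept a new host key either.

```shell
# Hypothetical helper: succeed only if SSH to host $1 works without any prompt.
# BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
can_login_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

if can_login_without_password localhost 2>/dev/null; then
    echo "key-based login to localhost works"
else
    echo "key-based login failed; re-check ~/.ssh/authorized_keys and its permissions"
fi
```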

3. Download Hadoop 3.1 Archive

In this step, download the Hadoop 3.1 release archive using the commands below. You can also select an alternate download mirror to increase download speed.

cd ~

wget http://www-eu.apache.org/dist/hadoop/common/hadoop-3.1.0/hadoop-3.1.0.tar.gz

tar xzf hadoop-3.1.0.tar.gz

mv hadoop-3.1.0 hadoop

4. Setup Hadoop Pseudo-Distributed Mode

4.1. Setup Hadoop Environment Variables

First, we need to set the environment variables used by Hadoop. Edit the ~/.bashrc file and append the following values at the end of the file.

export HADOOP_HOME=/home/hadoop/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Now apply the changes to the current running environment.

source ~/.bashrc
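To confirm that the new variables took effect, a check along the following lines may help. It is only a sketch: path_contains is a hypothetical helper, and the fallback /home/hadoop/hadoop is the HADOOP_HOME value used in this article.

```shell
# Hypothetical helper: succeed when directory $1 is one of the
# colon-separated entries of $PATH.
path_contains() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Fall back to the article's install location if HADOOP_HOME is unset.
hadoop_home="${HADOOP_HOME:-/home/hadoop/hadoop}"
for dir in "$hadoop_home/bin" "$hadoop_home/sbin"; do
    if path_contains "$dir"; then
        echo "ok: $dir is on the PATH"
    else
        echo "missing from PATH: $dir"
    fi
done
```

If either directory is reported missing, the hdfs command and the start scripts will only work when called with their full path.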

Now edit the $HADOOP_HOME/etc/hadoop/hadoop-env.sh file and set the JAVA_HOME environment variable. Change the Java path to match the installation on your system. This path may vary with your operating system version and installation source, so make sure you are using the correct path.

export JAVA_HOME=/usr/lib/jvm/java-8-oracle
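If you are unsure which path to use, one common approach is to resolve the java binary through its symlinks and take the directory two levels up. The sketch below does that. The helper name java_home_from_binary is made up for this example, and it assumes GNU readlink with the -f option, which is standard on Linux. On some Java 8 layouts the result ends in /jre, so treat the printed value as a candidate to inspect rather than a definitive answer.

```shell
# Hypothetical helper: print the directory two levels above a
# (possibly symlinked) java binary given as $1.
java_home_from_binary() {
    real_path="$(readlink -f "$1")" || return 1
    dirname "$(dirname "$real_path")"
}

java_bin="$(command -v java || true)"
if [ -n "$java_bin" ]; then
    echo "Candidate JAVA_HOME: $(java_home_from_binary "$java_bin")"
else
    echo "java is not on the PATH; install Java first"
fi
```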

4.2. Setup Hadoop Configuration Files

Hadoop has many configuration files, which need to be configured as per the requirements of your Hadoop infrastructure. Let’s start with the configuration for a basic single-node Hadoop cluster setup. First, navigate to the location below.

cd $HADOOP_HOME/etc/hadoop

Edit core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>

</property>

</configuration>

Edit mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Edit yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
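Before moving on, it can be worth checking that Hadoop actually reads the values you just entered. The sketch below wraps the hdfs getconf -confKey command in a helper; the name show_conf is an assumption of this example. fs.defaultFS is the current name of the fs.default.name property set above (Hadoop still accepts the older name), so with this configuration the output should show hdfs://localhost:9000 and a replication of 1. A malformed XML file should surface here as a parse error instead.

```shell
# Hypothetical helper: print the effective value of one Hadoop configuration key.
# hdfs getconf reads the *-site.xml files from the Hadoop configuration directory.
show_conf() {
    hdfs getconf -confKey "$1"
}

if command -v hdfs >/dev/null 2>&1; then
    for key in fs.defaultFS dfs.replication; do
        echo "$key = $(show_conf "$key")"
    done
else
    echo "hdfs is not on the PATH; re-check step 4.1"
fi
```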

4.3. Format Namenode

Now format the NameNode using the following command. In the output, make sure that the storage directory is reported as successfully formatted.

hdfs namenode -format

Sample output:

WARNING: /home/hadoop/hadoop/logs does not exist. Creating.

2018-05-02 17:52:09,678 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = tecadmin/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.0

...

...

...

2018-05-02 17:52:13,717 INFO common.Storage: Storage directory /home/hadoop/hadoopdata/hdfs/namenode has been successfully formatted.

2018-05-02 17:52:13,806 INFO namenode.FSImageFormatProtobuf: Saving image file /home/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2018-05-02 17:52:14,161 INFO namenode.FSImageFormatProtobuf: Image file /home/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2018-05-02 17:52:14,224 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2018-05-02 17:52:14,282 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at tecadmin/127.0.1.1

************************************************************/

5. Start Hadoop Cluster

Let’s start your Hadoop cluster using the scripts provided by Hadoop. Navigate to your $HADOOP_HOME/sbin directory and execute the scripts one by one.

cd $HADOOP_HOME/sbin/

Now run the start-dfs.sh script.

./start-dfs.sh

Sample output:

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [tecadmin]

2018-05-02 18:00:32,565 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Now run the start-yarn.sh script.

./start-yarn.sh

Sample output:

Starting resourcemanager

Starting nodemanagers

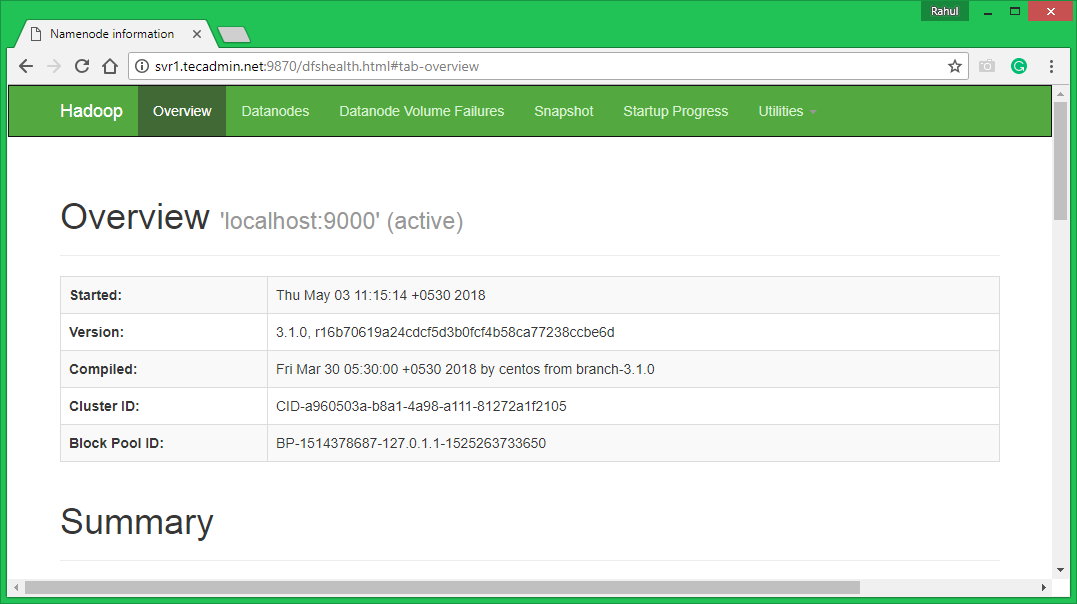
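Once both scripts have finished, the jps tool that ships with the JDK lists the running Java processes, which gives a quick way to see whether every daemon came up. The sketch below filters that list. The helper name missing_daemons is invented for this example, and the five names are the daemons started by start-dfs.sh and start-yarn.sh on a single node.

```shell
# Hypothetical helper: read jps output on stdin and print each expected
# daemon that does not appear in it.
missing_daemons() {
    jps_output="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words, so "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" | grep -qw "$daemon"; then
            echo "$daemon"
        fi
    done
}

if command -v jps >/dev/null 2>&1; then
    missing="$(jps | missing_daemons)"
    if [ -z "$missing" ]; then
        echo "all five daemons are running"
    else
        echo "not running:"
        echo "$missing"
    fi
else
    echo "jps not found; it is usually located in \$JAVA_HOME/bin"
fi
```

For any daemon reported as not running, its log file under $HADOOP_HOME/logs is the first place to look.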

6. Access Hadoop Services in Browser

The Hadoop NameNode web interface listens on port 9870 by default. Access your server on port 9870 in your favorite web browser.

http://svr1.tecadmin.net:9870/

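Now access port 8042 to view the NodeManager web interface, with information about the node and the applications running on it. The cluster-wide view of all applications is served by the ResourceManager, on port 8088 by default.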

http://svr1.tecadmin.net:8042/

Access port 9864 to get details about your Hadoop DataNode.

http://svr1.tecadmin.net:9864/
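If a page does not load in the browser, it helps to first check from the server itself whether anything is listening on these ports. The sketch below does so with curl. The helper name http_status and the use of localhost are assumptions for this example; the three ports are the ones listed above. Any status other than 000 means a web server answered, while 000 means curl could not connect, which points at a daemon that is not running or a firewall in between.

```shell
# Hypothetical helper: print the HTTP status code returned by URL $1.
# curl prints 000 when it cannot connect at all.
http_status() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1" || true
}

# Assumption: this runs on the Hadoop server itself, so the host is localhost.
for port in 9870 8042 9864; do
    echo "port $port -> HTTP $(http_status "http://localhost:$port/")"
done
```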

7. Test Hadoop Single Node Setup

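7.1. Create the required HDFS directories using the following commands. The bin/hdfs path is relative to $HADOOP_HOME, so change to that directory first, or simply run hdfs, which is on the PATH set in step 4.1.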

bin/hdfs dfs -mkdir /user

bin/hdfs dfs -mkdir /user/hadoop

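7.2. Copy all files from the local web server log directory to the Hadoop distributed file system using the command below. On CentOS and Fedora the directory is usually /var/log/httpd; /var/log/apache2, used in the command, is the Debian/Ubuntu location, so adjust the path to match your system.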

bin/hdfs dfs -put /var/log/apache2 logs

7.3. Browse the Hadoop distributed file system by opening the URL below in the browser. You will see an apache2 folder in the list. Click on the folder name to open it and you will find all the log files there.

http://svr1.tecadmin.net:9870/explorer.html#/user/hadoop/logs/

7.4. Now copy the logs directory from the Hadoop distributed file system to the local file system.

bin/hdfs dfs -get logs /tmp/logs

ls -l /tmp/logs/

You can also check this tutorial to run a wordcount MapReduce job example using the command line.

103 Comments

Out of so many i tried this one worked .. thanks a ton ..

i m getting this error : Error: Cannot find configuration directory: /etc/hadoop . any ideas ?

HI, I follow your tutorial and its very nice and helpful for me but I am stuck at its accessing in browser.

I am done till Start Hadoop Cluster.

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [hadoop-server]

2018-12-24 11:32:40,637 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Starting resourcemanager

Starting nodemanagers

As you can see its starting but still couldn’t access in browser.

I’ll very thankful if someone help me in this.

Thanks

Thanks. This post is helpful in the datanode and namenode configuration which is missing in the guide of hadoop.

Hi Rahul

I have received an error

WARNING: log4j.properties is not found. HADOOP_CONF_DIR may be incomplete.

Could you please help out me

Thanks,

Surya

Hello teacher ~!

How can I deal this under the error ?

WARNING: log4j.properties is not found. HADOOP_CONF_DIR may be incomplete.

ERROR: Invalid HADOOP_YARN_HOME

bin/hdfs dfs -mkdir /user

When I run this, it says

-su: bin/hdfs: No such file or directory

Please help

For the steps showing here, does it work on Red Hat Linux 7.5?

I have tried. But not able to make it work. Here is the error:

[hadoop@ol75 hadoop]$ hdfs namenode -format

2018-05-25 23:59:16,727 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = java.net.UnknownHostException: unallocated.barefruit.co.uk: unallocated.barefruit.co.uk: Name or service not known

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.0

STARTUP_MSG: classpath = ……

Thank you in advance.

David

It looks like your system hostname is “unallocated.barefruit.co.uk”, which is not resolving. Edit the /etc/hosts file and bind the hostname to the system IP.

127.0.0.1 unallocated.barefruit.co.uk

PROBLEM WITH 7.2 –

I finished most of this tutorial but am now stuck on finding var/log/apache2

– after running a few commands to search my system for apache2, I don’t get back any file or directory called apache2. Does anyone have any insight on this?

Hi Rahul,

This link is not working,

wget http://www-eu.apache.org/dist/hadoop/common/hadoop-2.8.0/hadoop-2.8.0.tar.gz

pls check

Hi Hema, Thanks for pointing out.

This tutorial has been updated to the latest version of Hadoop installation.

Dear Mr Rahul,

Thank for your extended support on hadoop. Here i have small doubt “how to share single node hadoop cluster ” for a number of 60 clinets. Is there any possibility to do so. Please help

Regards

Dinakar N K

hi…..

Good Article…Thanks..:)

The information given is incomplete need to export more variables.

Hi Rahul,

This is just awesome!!! I have had some issues during installation but your guide to installing a hadoop cluster is just great !!

I still used the vi editor for editing the .bashrc file and all other xml files. Just the lack of linux editor knowledge I did it. I used the 127.0.0.1 instead of localhost as the alias names are not getting resolved.

Moreover there are some issues like the one below

16/02/22 03:05:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

[hadoop@localhost hadoop]$ sudo apt-get install openssh-client

sudo: apt-get: command not found

The above are the two issues that I’m facing but other than that… it has been a “Walk in the Park” with this tutorial

Thanks Buddy!!

Shankar

Do you have some idea ?

16/01/26 09:17:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Starting namenodes on [localhost]

localhost: /home/hadoop/hadoop/sbin/slaves.sh: line 60: ssh: command not found

localhost: /home/hadoop/hadoop/sbin/slaves.sh: line 60: ssh: command not found

Starting secondary namenodes [0.0.0.0]

0.0.0.0: /home/hadoop/hadoop/sbin/slaves.sh: line 60: ssh: command not found

16/01/26 09:17:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Hi Sergey,

It looks you don’t have openssh-client package installed. Please install it.

$ sudo apt-get install openssh-client

hi rahul i followed your setup here but unfortunately i ended up some errors like the one below.

[hadoop@localhost hadoop-2.8.0]$ bin/hdfs namenode -format

Error: Could not find or load main class org.apache.hadoop.hdfs.server.namenode.NameNode

appreciate some help…thanks

Hi rahul,

good job,

Now expend a bit more and next time show integration of spark and flume.

If you need help ping me and I will provide you with my additions to your config …

Regards,

Can anybody can tell me where is bin/hdfs in Step 7 please ?

For me it says “Not a directory”

This can be misleading. What it is saying is from the bin folder within the hadoop system folder.

$ cd $HADOOP_HOME/bin

$ hdfs dfs -mkdir /user

$ hdfs dfs -mkdir /user/hadoop

bash: /home/hadoop/.bashrc: Permission denied

I guess that the CentOS 7/Hadoop 2.7.1 tutorial was very helpful until step 4, when by some reason the instructions just explained what to do with .bashrc without explaining how to get to it and how to edit it in first place. Thanks anyway, I just need to find a tutorial that explains with detail how to set up Hadoop.

Thanks. I read your article have successfully deployed my first single node hadoop deployment despite series of unsuccessful attempts in the past. thanks

Hi Rahul,

Please help me! I installed follow your guide. when i run jps that result below:

18118 Jps

18068 TaskTracker

17948 JobTracker

17861 SecondaryNameNode

17746 DataNode

however when I run stop-all.sh command that

no jobtracker to stop

localhost: no tasktracker to stop

no namenode to stop

localhost: no datanode to stop

localhost: no secondarynamenode to stop

Can you explan for me? Thanks so much!

Hai Rahul,

Can u plz tell me how to configure multiple datanodes on a single machine.I am using hadoop2.5

HI Rahul,

I am not able to start the hadoop services..getting an error like>

14/07/24 23:11:27 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

hadoop@localhost hadoop]$ bin/start-all.sh

bash: bin/start-all.sh: No such file or directory

Hi Rahul,

When I execute the command $ bin/start-all.sh, I am getting the FATAL ERROR as shown below

–====

[hadoop@localhost hadoop]$ sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

[Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

14/06/10 01:16:14 FATAL conf.Configuration: error parsing conf core-site.xml

.

.

.

.

Starting namenodes on []

localhost: starting namenode, logging to /opt/hadoop/hadoop/logs/hadoop-hadoop-namenode-localhost.localdomain.out

localhost: [Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

localhost: starting datanode, logging to /opt/hadoop/hadoop/logs/hadoop-hadoop-datanode-localhost.localdomain.out

localhost: [Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

[Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

14/06/10 01:16:25 FATAL conf.Configuration: error parsing conf core-site.xml

org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/etc/hadoop/core-site.xml; lineNumber: 1; columnNumber: 1; Content is not allowed in prolog.

.

.

.

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop/logs/yarn-hadoop-resourcemanager-localhost.localdomain.out

[Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

localhost: starting nodemanager, logging to /opt/hadoop/hadoop/logs/yarn-hadoop-nodemanager-localhost.localdomain.out

localhost: [Fatal Error] core-site.xml:1:1: Content is not allowed in prolog.

–===

where core-site.xml edited like this

$vim core-site.xml

fs.default.name

hdfs://localhost:9000/

dfs.permissions

false

#########################################

please help me to overcome this error.

Thanks a lot.

I made a few modifications but the the instructions are on the money!

If you get

WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable use the instructions from below

http://stackoverflow.com/questions/20011252/hadoop-2-2-0-64-bit-installing-but-cannot-start

Hi,

Your artcile is simply super, I followed each and every step and installed hadoop.

my suggestion is: while checking status with “jps”, it was not showing information and I got no such command. I used again export JAVA_HOME stuff. after that JPS working.

I guess.. these export JAVA_HOME also need to include in some where else.

Thansk alot for your article buddy

Hi Rahul,

Can you please post some direction to test with the input/output data after installation?

for example: how can we upload a file to run a simple wordcount and get the output… ?

Regards,

Thang Nguyen

fs.default.name

hdfs://localhost:9000/

dfs.permissions

false

Hi

I could not find file content properly. Can you email me at rahul.kumar1099@gmail.com.

hi rahul,

Plz help me for following case

[FATAL ERROR] core-site.xml:10:2: The markup in the document following the root element must be well-formed.

error while running “bin/start-all.sh” on all *.xml files

Hi,

Please provide your core-site.xml file content

localhost: [fatal Error] core-site.xml:10:2: The markup in the document following the root element must be well-formed.

error while running “bin/start-all.sh”

Hi Rahul,

jps 0r $JAVA_HOME/bin/jps giving error bash:jps: command not found and bash: /bin/jps: no such file or directory….. Kindly address the error…

HI Elavarasa,

Please make sure you have setup JAVA_HOME environment variable. Please provide output of following commands.

# env | grep JAVA_HOME

# echo $JAVA_HOME

Hi Rahul,

I have successfully done the installation of single node cluster and able to see all daemons running.

But i am not able to run hadoop fs commands , for this should i install any thing else like jars??

Thanks in advance…

How do we add data to the single node cluster.

Hi Rahul,

Do you know what is the best site to download Pig and hive? I realized that I am unable to run and pig and hive. I thought it comes with the package just like while setting-up under cloudera.

Hi Rahul,

I just restarted the hadoop and JPS is working fine.

Hi Rahul,

jps is not showing under $JAVA_HOME/bin

It comes out with an error no such file or directory

Also, once I complete my tasks and comes out of linux, do I need to restart the hadoop?

Hi Rahul,

Your instructions are superp. The only issue I am facing is a “jps” not getting recognized…. Not sure where the issue could be. Otherwise all set to go.

Hi Raj,

Try following command for jps.

$JAVA_HOME/bin/jps

[hadoop@RHEL hadoop]$ bin/hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

14/02/03 03:38:23 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = java.net.UnknownHostException: RHEL: RHEL

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /opt/hadoop/hadoop/etc/hadoop:/opt/hadoop/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/activation-1.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/asm-3.2.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/hadoop/h
adoop/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/xz-1.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/hadoop/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/opt/hadoop/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/opt/hadoop/hadoop/share/hadoop/hdfs:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.
jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/hadoop/hadoop/share/ha
doop/yarn/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/asm-3.2.j
ar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/javax.

inject-1.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/opt/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common -r 1529768; compiled by ‘hortonmu’ on 2013-10-07T06:28Z

STARTUP_MSG: java = 1.6.0_20

************************************************************/

14/02/03 03:38:23 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

14/02/03 03:38:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

14/02/03 03:38:25 WARN common.Util: Path /opt/hadoop/hadoop/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

14/02/03 03:38:25 WARN common.Util: Path /opt/hadoop/hadoop/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

Formatting using clusterid: CID-c4662030-0d86-4263-aed9-043fd34565cd

14/02/03 03:38:25 INFO namenode.HostFileManager: read includes:

HostSet(

)

14/02/03 03:38:25 INFO namenode.HostFileManager: read excludes:

HostSet(

)

14/02/03 03:38:25 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

14/02/03 03:38:25 INFO util.GSet: Computing capacity for map BlocksMap

14/02/03 03:38:25 INFO util.GSet: VM type = 64-bit

14/02/03 03:38:25 INFO util.GSet: 2.0% max memory = 966.7 MB

14/02/03 03:38:25 INFO util.GSet: capacity = 2^21 = 2097152 entries

14/02/03 03:38:25 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

14/02/03 03:38:25 INFO blockmanagement.BlockManager: defaultReplication = 2

14/02/03 03:38:25 INFO blockmanagement.BlockManager: maxReplication = 512

14/02/03 03:38:25 INFO blockmanagement.BlockManager: minReplication = 1

14/02/03 03:38:25 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

14/02/03 03:38:25 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

14/02/03 03:38:25 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

14/02/03 03:38:25 INFO blockmanagement.BlockManager: encryptDataTransfer = false

14/02/03 03:38:25 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

14/02/03 03:38:25 INFO namenode.FSNamesystem: supergroup = supergroup

14/02/03 03:38:25 INFO namenode.FSNamesystem: isPermissionEnabled = true

14/02/03 03:38:25 INFO namenode.FSNamesystem: HA Enabled: false

14/02/03 03:38:25 INFO namenode.FSNamesystem: Append Enabled: true

14/02/03 03:38:25 INFO util.GSet: Computing capacity for map INodeMap

14/02/03 03:38:25 INFO util.GSet: VM type = 64-bit

14/02/03 03:38:25 INFO util.GSet: 1.0% max memory = 966.7 MB

14/02/03 03:38:25 INFO util.GSet: capacity = 2^20 = 1048576 entries

14/02/03 03:38:25 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/02/03 03:38:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

14/02/03 03:38:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

14/02/03 03:38:25 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

14/02/03 03:38:25 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

14/02/03 03:38:25 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

14/02/03 03:38:25 INFO util.GSet: Computing capacity for map Namenode Retry Cache

14/02/03 03:38:25 INFO util.GSet: VM type = 64-bit

14/02/03 03:38:25 INFO util.GSet: 0.029999999329447746% max memory = 966.7 MB

14/02/03 03:38:25 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory /opt/hadoop/hadoop/dfs/name ? (Y or N) y

14/02/03 03:38:29 WARN net.DNS: Unable to determine local hostname -falling back to “localhost”

java.net.UnknownHostException: RHEL: RHEL

at java.net.InetAddress.getLocalHost(InetAddress.java:1426)

at org.apache.hadoop.net.DNS.resolveLocalHostname(DNS.java:264)

at org.apache.hadoop.net.DNS.(DNS.java:57)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.newBlockPoolID(NNStorage.java:914)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.newNamespaceInfo(NNStorage.java:550)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:144)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:837)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1213)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1320)

14/02/03 03:38:29 WARN net.DNS: Unable to determine address of the host-falling back to “localhost” address

java.net.UnknownHostException: RHEL: RHEL

at java.net.InetAddress.getLocalHost(InetAddress.java:1426)

at org.apache.hadoop.net.DNS.resolveLocalHostIPAddress(DNS.java:287)

at org.apache.hadoop.net.DNS.(DNS.java:58)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.newBlockPoolID(NNStorage.java:914)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.newNamespaceInfo(NNStorage.java:550)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:144)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:837)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1213)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1320)

14/02/03 03:38:29 INFO common.Storage: Storage directory /opt/hadoop/hadoop/dfs/name has been successfully formatted.

14/02/03 03:38:29 INFO namenode.FSImage: Saving image file /opt/hadoop/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

14/02/03 03:38:29 INFO namenode.FSImage: Image file /opt/hadoop/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 198 bytes saved in 0 seconds.

14/02/03 03:38:29 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/02/03 03:38:30 INFO util.ExitUtil: Exiting with status 0

14/02/03 03:38:30 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at java.net.UnknownHostException: RHEL: RHEL

************************************************************/

[hadoop@RHEL hadoop]$

hosts file:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

RHEL

please help

Hi Javed,

Your system's hosts file entry looks incorrect. Please add an entry like the one below:

127.0.0.1 RHEL
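
The hosts file posted above lists RHEL on a line of its own, with no address in front of it, so the name cannot resolve. Here is a minimal sketch for catching this before formatting the namenode. `hosts_has_name` is a hypothetical helper (not part of Hadoop), and it assumes the usual hosts format of an address followed by one or more names:

```shell
# Return 0 if NAME ($2) appears as a hostname or alias (field 2 onward)
# on a non-comment line of the hosts file ($1); return 1 otherwise.
hosts_has_name() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }              # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break           # ignore trailing comments
                if ($i == name) found = 1
            }
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Typical use:
#   hosts_has_name /etc/hosts "$(hostname)" || echo "add: 127.0.0.1 $(hostname)"
```

A bare name such as the lone RHEL line is not counted, because it has no address field in front of it.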

Hi Rahul!

Just to say your instructions worked like a dream.

In my hadoop-env.sh I used.

export JAVA_HOME=/usr/lib/jvm/jre-1.6.0 <- might help others its a vanilla Centos 6.5 install.

Cheers Paul
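
For installs where the Java path differs, a JAVA_HOME candidate can usually be derived from the java binary itself. This is a rough sketch, assuming java is on the PATH and sits under a `bin/java` directory layout; `java_home_from_bin` is a made-up helper name:

```shell
# Strip the trailing /bin/java from a resolved java path
# to get a candidate value for JAVA_HOME.
java_home_from_bin() {
    printf '%s\n' "${1%/bin/java}"
}

# Typical use (readlink -f follows symlinks such as /etc/alternatives/java):
#   java_home_from_bin "$(readlink -f "$(command -v java)")"
```

The printed path is only a candidate; check that it contains bin/java before putting it in hadoop-env.sh.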

This explanation is as good as any other I have seen so far… Great effort Rahul, I’ve personally installed pseudo-distributed and fully distributed clusters at my workplace using these tutorials.

Thanks a lot buddy 🙂

Keep doing such good works 🙂

Thanks Sravan

hi sir rahul

I followed your guide step by step but ran into a problem while executing

this command:

./start-dfs.sh

output:

[root@localhost sbin]# ./start-dfs.sh

17/04/05 22:14:32 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:32 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

17/04/05 22:14:33 WARN conf.Configuration: bad conf file: element not

thanks

I am getting the error below when I run “bin/start-all.sh”. Please suggest a fix.

hadoop@localhost hadoop]$ bin/start-all.sh

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

starting namenode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-namenode-localhost.localdomain.out

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: datanode running as process 3602. Stop it first.

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: secondarynamenode running as process 3715. Stop it first.

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

jobtracker running as process 3793. Stop it first.

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

/opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: /opt/hadoop/hadoop/libexec/../conf/hadoop-env.sh: line 1: i#: command not found

localhost: tasktracker running as process 3921. Stop it first.

[hadoop@localhost hadoop]$ jvs

-bash: jvs: command not found

I fixed these errors by checking the logs and getting help from other websites.

I have a question: how can I run the wordcount example on multiple files in a folder?

bin/hadoop dfs -copyFromLocal files /testfiles

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar wordcount /files /testfiles

This throws an error saying testfiles is not a file. It should work for a directory too, right?

Thanks

oops. Spoke too soon. I do see the JobHistoryServer start – then it stopped it looks like.

-bash-3.2$ jps

21984 NameNode

27080 DataNode

1638 ResourceManager

1929 NodeManager

5718 JobHistoryServer

6278 Jps

-bash-3.2$ jps

21984 NameNode

27080 DataNode

11037 Jps

1638 ResourceManager

1929 NodeManager

Where can I see information about why it stopped? Can you please suggest something? Sorry for the multiple posts, but I had to post an update and can’t seem to find any help by googling.

Thanks

Hi

I tried changing the entry in core-site.xml

hdfs://drwdt001.myers.com:9000

instead of

hdfs://drwdt001:9000

and that helped with the startup of the HistoryServer. Now I can see it.

Now, though, I am not sure if my datanode will restart. I had issues with its startup, and that’s the reason I removed the domain and used just the server name as the value.

Any ideas? I will test before I shut and restart

Thanks.

Hi

I downloaded and installed Apache hadoop 2.2 latest. Followed the above setup for single node ( First time setup.) RHEL 5.5

Name node, DataNode, ResourceManager, NodeManager started fine. Had some issue with datanode & had to update the IPtables for opening ports.

when I run

-bash-3.2$ sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /hadoop/hadoop-2.2.0/logs/mapred-hduser-historyserver-server.out

When I run jps, I don’t see the JobHistoryServer listed. There are no errors in the out file above.

Can someone please assist?

Thanks

JKH

Hello Rahul,

I need your help. My cluster does not work; there must be something wrong in the configuration.

When I run start-all.sh, it does not start the secondarynamenode.

It shows this error:

starting secondarynamenode, logging to /opt/hadoop/hadoop-1.2.1/libexec/../logs/hadoop-hadoop-secondarynamenode-lbad012.out

lbad012: Exception in thread “main” java.lang.IllegalArgumentException: Does not contain a valid host:port authority: file:///

lbad012: at org.apache.hadoop.net.NetUtils.createSocketAddr(NetUtils.java:164)

lbad012: at org.apache.hadoop.hdfs.server.namenode.NameNode.getAddress(NameNode.java:212)

lbad012: at org.apache.hadoop.hdfs.server.namenode.NameNode.getAddress(NameNode.java:244)

lbad012: at org.apache.hadoop.hdfs.server.namenode.NameNode.getServiceAddress(NameNode.java:236)

lbad012: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.initialize(SecondaryNameNode.java:194)

lbad012: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.(SecondaryNameNode.java:150)

lbad012: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.main(SecondaryNameNode.java:676)

heyy

please help.

When I run step 6, it asks for a password even though I haven’t set one. Please tell me what to do.

bin/start-all.sh

starting namenode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-namenode-demo.example.com.out

hadoop@localhost’s password:

Thanx

Hi Dheeraj,

I think you have not completed Step #3 properly. Please check and complete it. Make sure you are able to ssh to localhost without a password.
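
The password prompt above can be checked for without starting Hadoop at all. Here is a small sketch, assuming the key pair and authorized_keys file were created under ~/.ssh as in the tutorial; both function names are invented for this example:

```shell
# Return 0 if the public key in file $1 appears as a whole line
# in the authorized_keys file $2.
key_is_authorized() {
    [ -s "$1" ] && grep -qxF -f "$1" "$2"
}

# Return 0 only if ssh can log in to localhost without prompting.
# BatchMode=yes makes ssh fail instead of asking for a password
# (or for confirmation of an unknown host key).
can_ssh_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true
}

# Typical use, as the hadoop user:
#   key_is_authorized ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys || echo "key not authorized"
#   can_ssh_without_password || echo "ssh still needs a password"
```

If the first check passes but the second fails, the permissions on ~/.ssh and authorized_keys are worth a look, since the tutorial sets the latter to 0600.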

I have configured Cloudera on a single node successfully. However, when I launch Hue @//myaddress.8888 I see the error message below at the configuration level.

”

Configuration files located in /var/run/cloudera-scm-agent/process/57-hue-HUE_SERVER

Potential misconfiguration detected. Fix and restart Hue.”

and my hue_safety_valve.ini looks as below

[hadoop]

[[mapred_clusters]]

[[[default]]]

jobtracker_host=servername

thrift_port=9290

jobtracker_port=8021

submit_to=True

hadoop_mapred_home={{HADOOP_MR1_HOME}}

hadoop_bin={{HADOOP_BIN}}

hadoop_conf_dir={{HADOOP_CONF_DIR}}

security_enabled=false

Can you please suggest the steps to uninstall Apache Hadoop? I am planning to test Cloudera as well; if you can share steps for Cloudera too, that would be awesome!

Please help me. When I run $ bin/start-all.sh, the output has an error:

starting namenode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-namenode-hadoop-master.out

The authenticity of host ‘localhost (::1)’ can’t be established.

RSA key fingerprint is 7b:6d:cb:fc:48:7b:c6:42:a5:6a:64:83:ab:a8:95:95.

Are you sure you want to continue connecting (yes/no)? hadoop-slave-2: starting datanode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-datanode-hadoop-slave-2.out

hadoop-slave-1: starting datanode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-datanode-hadoop-slave-1.out

Hi Toan,

Are you configuring a Hadoop multi-node cluster?

I have found solutions for my issues. Thanks Rahul for your post, it helped me a lot!

Sounds good Rakesh… Thanks

I have tried the URLs below for the job tracker, but none of them worked. I also checked with the network team, and they confirmed that there is no firewall blocking them.

http://ipaddress:50030/

http://localhost:50030/

http://servername:50030/

Core xml file for reference

fs.default.name

hdfs://hadoop-01:9000/

dfs.permissions

false

Hi Rakesh,

TecAdmin.net comments do not support HTML tags properly. Can you please share a screenshot of your file?

Hi Rahul,

Sorry for my delayed response.

I was able to resolve the above error. By mistake I had deleted the wrapper element in the XML, which caused the error. I have now restored the right data in the XML and got the output below after the format.

[hadoop@hadoop-01 hadoop]$ /opt/jdk1.7.0_40/bin/jps

2148 NameNode

2628 TaskTracker

2767 Jps

2503 JobTracker

2415 SecondaryNameNode

2274 DataNode

However, in the XML files I have used localhost instead of my server’s IP address. Is that fine? I ask because I am unable to open the web URLs.

Hi Rahul,

I am trying to install a 3-node Hadoop cluster using the v2.1.0-beta version. I can see there are a lot of changes in terms of directory structure (as compared to v1.2.1), but after completing most of the steps mentioned here, when I issue the command “$JAVA_HOME/bin/jps” on my master server I see this output:

16467 Resource Manager

15966 NameNode

16960 Jps

16255 SecondaryNameNode

But I can’t see my DataNode or NodeManager getting started.

I can send you the output of the “start-all.sh” script (which throws a lot of error lines but somehow is able to start the services mentioned above), if you think that would help.

Secondly, I am not able to open any of the pages mentioned as part of the web services, e.g.

http://:50030/ for the Jobtracker -> Resource Manager

http://:50070/ for the Namenode

Would appreciate any comment from your side on my queries here.

Hi Sudeep,

Yes, please post the output of the start-all.sh command along with the log files. But first, please empty your log files, then run start-all.sh, and after that post all the output.

Also, I would prefer that you post your question on our new forum, where it will be easier to communicate.

Here is the output of start-all.sh script.

[hadoop@hadoop-master sbin]$ ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

13/10/20 18:45:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Starting namenodes on [Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /opt/hadoop/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It’s highly recommended that you fix the library with ‘execstack -c ‘, or link it with ‘-z noexecstack’.

hadoop-master]

sed: -e expression #1, char 6: unknown option to `s’

Java: ssh: Could not resolve hostname Java: Temporary failure in name resolution

64-Bit: ssh: Could not resolve hostname 64-Bit: Temporary failure in name resolution

HotSpot(TM): ssh: Could not resolve hostname HotSpot(TM): Temporary failure in name resolution

Server: ssh: Could not resolve hostname Server: Temporary failure in name resolution

VM: ssh: Could not resolve hostname VM: Temporary failure in name resolution

warning:: ssh: Could not resolve hostname warning:: Temporary failure in name resolution

You: ssh: Could not resolve hostname You: Temporary failure in name resolution

have: ssh: Could not resolve hostname have: Temporary failure in name resolution

library: ssh: Could not resolve hostname library: Temporary failure in name resolution

have: ssh: Could not resolve hostname have: Temporary failure in name resolution

which: ssh: Could not resolve hostname which: Temporary failure in name resolution

disabled: ssh: Could not resolve hostname disabled: Temporary failure in name resolution

will: ssh: Could not resolve hostname will: Temporary failure in name resolution

stack: ssh: Could not resolve hostname stack: Temporary failure in name resolution

guard.: ssh: Could not resolve hostname guard.: Temporary failure in name resolution

might: ssh: Could not resolve hostname might: Temporary failure in name resolution

stack: ssh: Could not resolve hostname stack: Temporary failure in name resolution

the: ssh: Could not resolve hostname the: Temporary failure in name resolution

loaded: ssh: Could not resolve hostname loaded: Temporary failure in name resolution

VM: ssh: Could not resolve hostname VM: Temporary failure in name resolution

‘execstack: ssh: Could not resolve hostname ‘execstack: Temporary failure in name resolution

to: ssh: Could not resolve hostname to: Temporary failure in name resolution

that: ssh: Could not resolve hostname that: Temporary failure in name resolution

try: ssh: Could not resolve hostname try: Temporary failure in name resolution

highly: ssh: Could not resolve hostname highly: Temporary failure in name resolution

link: ssh: Could not resolve hostname link: Temporary failure in name resolution

fix: ssh: Could not resolve hostname fix: Temporary failure in name resolution

you: ssh: Could not resolve hostname you: Temporary failure in name resolution

guard: ssh: Could not resolve hostname guard: Temporary failure in name resolution

fix: ssh: Could not resolve hostname fix: Temporary failure in name resolution

or: ssh: Could not resolve hostname or: Temporary failure in name resolution

It’s: ssh: Could not resolve hostname It’s: Temporary failure in name resolution

recommended: ssh: Could not resolve hostname recommended: Temporary failure in name resolution

the: ssh: Could not resolve hostname the: Temporary failure in name resolution

-c: Unknown cipher type ‘cd’

‘-z: ssh: Could not resolve hostname ‘-z: Temporary failure in name resolution

with: ssh: Could not resolve hostname with: Temporary failure in name resolution

with: ssh: Could not resolve hostname with: Temporary failure in name resolution

now.: ssh: Could not resolve hostname now.: Temporary failure in name resolution

noexecstack’.: ssh: Could not resolve hostname noexecstack’.: Temporary failure in name resolution

‘,: ssh: Could not resolve hostname ‘,: Temporary failure in name resolution

The: ssh: Could not resolve hostname The: Temporary failure in name resolution

it: ssh: Could not resolve hostname it: Temporary failure in name resolution

library: ssh: Could not resolve hostname library: Temporary failure in name resolution

hadoop-master: starting namenode, logging to /opt/hadoop/hadoop/logs/hadoop-hadoop-namenode-hadoop-master.out

hadoop-master: Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /opt/hadoop/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

hadoop-master: It’s highly recommended that you fix the library with ‘execstack -c ‘, or link it with ‘-z noexecstack’.

hadoop-slave-1: bash: line 0: cd: /opt/hadoop/hadoop: No such file or directory

hadoop-slave-1: bash: /opt/hadoop/hadoop/sbin/hadoop-daemon.sh: No such file or directory

hadoop-slave-2: bash: line 0: cd: /opt/hadoop/hadoop: No such file or directory

hadoop-slave-2: bash: /opt/hadoop/hadoop/sbin/hadoop-daemon.sh: No such file or directory

Starting secondary namenodes [Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /opt/hadoop/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It’s highly recommended that you fix the library with ‘execstack -c ‘, or link it with ‘-z noexecstack’.

0.0.0.0]

sed: -e expression #1, char 6: unknown option to `s’

64-Bit: ssh: Could not resolve hostname 64-Bit: Temporary failure in name resolution

Java: ssh: Could not resolve hostname Java: Temporary failure in name resolution

Server: ssh: Could not resolve hostname Server: Temporary failure in name resolution

HotSpot(TM): ssh: Could not resolve hostname HotSpot(TM): Temporary failure in name resolution

VM: ssh: Could not resolve hostname VM: Temporary failure in name resolution

warning:: ssh: Could not resolve hostname warning:: Temporary failure in name resolution

have: ssh: Could not resolve hostname have: Temporary failure in name resolution

You: ssh: Could not resolve hostname You: Temporary failure in name resolution

library: ssh: Could not resolve hostname library: Temporary failure in name resolution

might: ssh: Could not resolve hostname might: Temporary failure in name resolution

loaded: ssh: Could not resolve hostname loaded: Temporary failure in name resolution

try: ssh: Could not resolve hostname try: Temporary failure in name resolution

guard.: ssh: Could not resolve hostname guard.: Temporary failure in name resolution

stack: ssh: Could not resolve hostname stack: Temporary failure in name resolution

which: ssh: Could not resolve hostname which: Temporary failure in name resolution

have: ssh: Could not resolve hostname have: Temporary failure in name resolution

now.: ssh: Could not resolve hostname now.: Temporary failure in name resolution

stack: ssh: Could not resolve hostname stack: Temporary failure in name resolution

The: ssh: Could not resolve hostname The: Temporary failure in name resolution

guard: ssh: Could not resolve hostname guard: Temporary failure in name resolution

will: ssh: Could not resolve hostname will: Temporary failure in name resolution

the: ssh: Could not resolve hostname the: Temporary failure in name resolution

recommended: ssh: Could not resolve hostname recommended: Temporary failure in name resolution

disabled: ssh: Could not resolve hostname disabled: Temporary failure in name resolution

VM: ssh: Could not resolve hostname VM: Temporary failure in name resolution

to: ssh: Could not resolve hostname to: Temporary failure in name resolution

‘execstack: ssh: Could not resolve hostname ‘execstack: Temporary failure in name resolution

highly: ssh: Could not resolve hostname highly: Temporary failure in name resolution

‘,: ssh: Could not resolve hostname ‘,: Temporary failure in name resolution

that: ssh: Could not resolve hostname that: Temporary failure in name resolution

-c: Unknown cipher type ‘cd’

‘-z: ssh: Could not resolve hostname ‘-z: Temporary failure in name resolution

link: ssh: Could not resolve hostname link: Temporary failure in name resolution

fix: ssh: Could not resolve hostname fix: Temporary failure in name resolution

or: ssh: Could not resolve hostname or: Temporary failure in name resolution

library: ssh: Could not resolve hostname library: Temporary failure in name resolution

it: ssh: Could not resolve hostname it: Temporary failure in name resolution

you: ssh: Could not resolve hostname you: Temporary failure in name resolution

It’s: ssh: Could not resolve hostname It’s: Temporary failure in name resolution

with: ssh: Could not resolve hostname with: Temporary failure in name resolution

with: ssh: Could not resolve hostname with: Temporary failure in name resolution

fix: ssh: Could not resolve hostname fix: Temporary failure in name resolution

the: ssh: Could not resolve hostname the: Temporary failure in name resolution

noexecstack’.: ssh: Could not resolve hostname noexecstack’.: Temporary failure in name resolution

0.0.0.0: starting secondarynamenode, logging to /opt/hadoop/hadoop/logs/hadoop-hadoop-secondarynamenode-hadoop-master.out

0.0.0.0: Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /opt/hadoop/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

0.0.0.0: It’s highly recommended that you fix the library with ‘execstack -c ‘, or link it with ‘-z noexecstack’.

13/10/20 18:45:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop/logs/yarn-hadoop-resourcemanager-hadoop-master.out

hadoop-slave-1: bash: line 0: cd: /opt/hadoop/hadoop: No such file or directory

hadoop-slave-1: bash: /opt/hadoop/hadoop/sbin/yarn-daemon.sh: No such file or directory

hadoop-slave-2: bash: line 0: cd: /opt/hadoop/hadoop: No such file or directory

hadoop-slave-2: bash: /opt/hadoop/hadoop/sbin/yarn-daemon.sh: No such file or directory

[hadoop@hadoop-master sbin]$ $JAVA_HOME/bin/jps

15130 Jps

14697 SecondaryNameNode

14872 ResourceManager

14408 NameNode

[hadoop@hadoop-master sbin]$

Hello,

I also faced the same issue as Mr. Rakesh

while executing the format command:

[hadoop@localhost hadoop]$ bin/hadoop namenode -format

15/06/24 18:32:24 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost.localdomain/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.2.1

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152; compiled by ‘mattf’ on Mon Jul 22 15:23:09 PDT 2013

STARTUP_MSG: java = 1.7.0_79

************************************************************/

[Fatal Error] core-site.xml:1:21: The processing instruction target matching “[xX][mM][lL]” is not allowed.

15/06/24 18:32:25 FATAL conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 1; columnNumber: 21; The processing instruction target matching “[xX][mM][lL]” is not allowed.

15/06/24 18:32:25 ERROR namenode.NameNode: java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 1; columnNumber: 21; The processing instruction target matching “[xX][mM][lL]” is not allowed.

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:1249)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:1107)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:1053)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:420)

at org.apache.hadoop.hdfs.server.namenode.NameNode.setStartupOption(NameNode.java:1374)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1463)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1488)

Caused by: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 1; columnNumber: 21; The processing instruction target matching “[xX][mM][lL]” is not allowed.

at com.sun.org.apache.xerces.internal.parsers.DOMParser.parse(DOMParser.java:257)

at com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderImpl.parse(DocumentBuilderImpl.java:347)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:177)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:1156)

… 6 more

15/06/24 18:32:25 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

please help me solve the issue

thanks in advance

Hi Lini,

Please check your core-site.xml file. It looks like this file has a syntax issue.

delete the line from the top of the core-site.xml file
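
The “[xX][mM][lL]” parser error quoted above is typically raised when something (a blank line, a space, stray text) comes before the XML declaration, which is only allowed at the very start of a file. Here is a quick sketch for spotting that case; `xml_decl_misplaced` is a hypothetical helper, and it does not handle a UTF-8 byte-order mark:

```shell
# Return 0 if the file ($1) contains an XML declaration that is not
# at the very first byte; return 1 if it is at the start or absent.
xml_decl_misplaced() {
    grep -qF '<?xml' "$1" || return 1      # no declaration: nothing to misplace
    [ "$(head -c 5 "$1")" != '<?xml' ]
}

# Typical use, with the conf path shown in the log above:
#   xml_decl_misplaced /opt/hadoop/hadoop/conf/core-site.xml && echo "declaration is not at the start"
```

The fix is then either to move the declaration to the first byte of the file or to remove it, since the declaration is optional.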

Hi

I ran the format step and got below error message

bin/hadoop namenode -format

13/10/09 15:33:33 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop-01/10.49.14.42

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.2.1

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152; compiled by ‘mattf’ on Mon Jul 22 15:23:09 PDT 2013

STARTUP_MSG: java = 1.6.0_20

************************************************************/

[Fatal Error] core-site.xml:5:2: The markup in the document following the root element must be well-formed.

13/10/09 15:33:33 FATAL conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 5; columnNumber: 2; The markup in the document following the root element must be well-formed.

13/10/09 15:33:33 ERROR namenode.NameNode: java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 5; columnNumber: 2; The markup in the document following the root element must be well-formed.

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:1249)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:1107)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:1053)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:420)

at org.apache.hadoop.hdfs.server.namenode.NameNode.setStartupOption(NameNode.java:1374)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1463)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1488)

Caused by: org.xml.sax.SAXParseException; systemId: file:/opt/hadoop/hadoop/conf/core-site.xml; lineNumber: 5; columnNumber: 2; The markup in the document following the root element must be well-formed.

at com.sun.org.apache.xerces.internal.parsers.DOMParser.parse(DOMParser.java:253)

at com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderImpl.parse(DocumentBuilderImpl.java:288)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:177)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:1156)

… 6 more

13/10/09 15:33:33 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-01/10.49.14.42

************************************************************/

Hi,

I have successfully installed hadoop cluster, and it seems to run fine. I was able to access the webservices after changing the “localhost” to the host’s ip address. However, when clicking the browse file system in namenode webaccess, Browser does not find the webpage. the url shows as “http://master:50075/browseDirectory.jsp?namenodeInfoPort=50070&dir=/”

(I get the same error at a few other places, but manually replacing the master with ip address seems to solve the issue)

“master” is the hostname I chose for this machine. My guess is this is a DNS issue, but I was wondering if we can change any config files so the links resolve to an ip address instead of master.

I also see that your mapred-site.xml and core-site.xml have “hdfs://localhost:port_num” in them, but you are able to access the web interface using your domain name (in the screenshots you provided). I am installing hadoop on remote system, and had to replace the localhost with the server’s ip address to access the web interface. Is this accepted practice? Or should I leave them as localhost and make changes elsewhere?

Third, for hdfs-site.xml, the only property I defined is the replication value (I was following a separate tutorial until about halfway). So I was wondering if you could explain what leaving dfs.data.dir and dfs.name.dir undefined would do? If we do define those values, can they be any directory, or do they have to be inside the Hadoop installation?

And last,

when I do get to the file browser (by manually replacing “master” in the URL with the IP address), I see a tmp directory there as well that contains the mapred folder and its subfolders. Is this normal?

Sincerely,

Ashutosh

Clarification:

By “I was able to access the web services after changing the “localhost” to the host’s ip address”, I meant changed the “localhost” in the hadoop/conf/*.xml files to a static ip address that I am now using to access the web services.

Hello,

Rahul, I have a question: why does step 7 not work in my cluster?

Hi Rodrigo,

What issue are you getting?

I cannot open the Hadoop URLs in my cluster.

Where is the URL configured?

A lot of factors can keep the URLs from showing a result. If you are on a local environment (working on the same machine that you are installing the cluster on), then this tutorial should have worked.

To the best of my knowledge, the “localhost” in

hdfs://localhost:9000/ and

localhost:9001

defined inside the core-site.xml and mapred-site.xml should be changed to the hostname of your system.

That is, if the hostname of your system is “linuxuser”, change “localhost” to “linuxuser” in these lines, then add the following line to the /etc/hosts file:

127.0.0.1 linuxuser

If you are working on a remote server, do the same as above, except that the hosts file should have the static IP instead of 127.0.0.1. You will then be able to see the web services, but there is another problem I ran into. See my question below.

It could be the firewall setup as well. Try disabling iptables to check whether it is a firewall issue. If you do this, make sure you restart iptables when you are done.

Hi, when I run bin/start-all.sh I get a message saying

fatal error mapred-site.xml:7:1:XML document structures must start and end within same entity

and I get the same message when I run

$ bin/hadoop jar hadoop-examples-*.jar grep input output 'dfs[a-z.]+'

I did this on Ubuntu in VirtualBox.
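That message typically means an element in the file was opened but never closed, so the parser reached the end of mapred-site.xml while still inside a tag. As a rough sketch for finding which config file is affected, assuming xmllint (from libxml2) is installed; check_xml is a hypothetical helper name, not a Hadoop command:

```shell
# check_xml FILE...
# Report which of the given files are not well-formed XML.
# Assumes xmllint (libxml2) is installed; check_xml itself is an illustrative helper.
check_xml() {
    status=0
    for f in "$@"; do
        # --noout suppresses the parsed document; only the exit status is used
        if xmllint --noout "$f" 2>/dev/null; then
            echo "ok:     $f"
        else
            echo "broken: $f"
            status=1
        fi
    done
    return "$status"
}

# Usage, from the Hadoop directory (Hadoop 1.x keeps its config under conf/):
#   check_xml conf/core-site.xml conf/hdfs-site.xml conf/mapred-site.xml
```

Any file reported as broken most likely has a missing closing tag such as </property> or </configuration>.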

[hadoop@girishniyer hadoop]$ bin/hadoop namenode -format

13/09/30 04:53:34 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = java.net.UnknownHostException: girishniyer: girishniyer

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.2.1

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152; compiled by ‘mattf’ on Mon Jul 22 15:23:09 PDT 2013

STARTUP_MSG: java = 1.7.0_40

************************************************************/

13/09/30 04:53:34 INFO util.GSet: Computing capacity for map BlocksMap

13/09/30 04:53:34 INFO util.GSet: VM type = 64-bit

13/09/30 04:53:34 INFO util.GSet: 2.0% max memory = 932184064

13/09/30 04:53:34 INFO util.GSet: capacity = 2^21 = 2097152 entries

13/09/30 04:53:34 INFO util.GSet: recommended=2097152, actual=2097152

13/09/30 04:53:34 INFO namenode.FSNamesystem: fsOwner=hadoop

13/09/30 04:53:34 INFO namenode.FSNamesystem: supergroup=supergroup

13/09/30 04:53:34 INFO namenode.FSNamesystem: isPermissionEnabled=true

13/09/30 04:53:34 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

13/09/30 04:53:34 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

13/09/30 04:53:34 INFO namenode.FSEditLog: dfs.namenode.edits.toleration.length = 0

13/09/30 04:53:34 INFO namenode.NameNode: Caching file names occuring more than 10 times

13/09/30 04:53:34 ERROR namenode.NameNode: java.io.IOException: Cannot create directory /opt/hadoop/hadoop/dfs/name/current

at org.apache.hadoop.hdfs.server.common.Storage$StorageDirectory.clearDirectory(Storage.java:294)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:1337)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:1356)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:1261)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1467)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1488)

13/09/30 04:53:34 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at java.net.UnknownHostException: girishniyer: girishniyer

************************************************************/

[hadoop@girishniyer hadoop]$

I configured Java as per your article.

Up to formatting the name node there were no issues,

but here I am stuck again.

Could you please help me?

Hi Girish,

Your system’s hostname is “girishniyer”, which is not resolving to any IP. Add an entry to the /etc/hosts file like the one below:

<system_ip> girishniyer
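To confirm that such an entry is actually in place before re-running the format command, something like the following sketch may help. It only reads a hosts-format file and does not consult DNS; hosts_lookup is a hypothetical helper name, not a system command.

```shell
# hosts_lookup NAME [FILE]
# Print the address that a hosts-format file maps NAME to.
# Returns non-zero when no entry matches. FILE defaults to /etc/hosts.
# (hosts_lookup is an illustrative helper, not a Hadoop or system command.)
hosts_lookup() {
    awk -v name="$1" '
        { sub(/#.*/, "") }                 # drop comments
        {
            for (i = 2; i <= NF; i++)      # field 1 is the address, the rest are names
                if ($i == name) { print $1; found = 1; exit }
        }
        END { exit !found }
    ' "${2:-/etc/hosts}"
}

# Check this machine's own hostname (uname -n prints the node name).
host="$(uname -n)"
if addr="$(hosts_lookup "$host")"; then
    echo "$host -> $addr"
else
    echo "$host has no entry in /etc/hosts"
fi
```

If the second message appears, the UnknownHostException in the log above is the expected result.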

I just began learning Hadoop. Your article was superbly simple and straightforward. The patience with which you helped Girish Iyer is commendable.

Thanks Girish,

Excellent article. I could configure Hadoop in 20 minutes. Do you have any more learning articles for beginners, like a first wordcount program?

When I type the following, I get the errors below.

[hadoop@girishniyer hadoop]$ bin/start-all.sh

Also, I am not able to format the name node. It says there is no such directory. Please help.

Is it due to the Java path I gave?

starting namenode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-namenode-girishniyer.out

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 350: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: exec: /usr/bin/java/jdk1.7.0_25/bin/java: cannot execute: Not a directory

localhost: starting datanode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-datanode-girishniyer.out

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 350: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: exec: /usr/bin/java/jdk1.7.0_25/bin/java: cannot execute: Not a directory

localhost: starting secondarynamenode, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-secondarynamenode-girishniyer.out

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 350: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: exec: /usr/bin/java/jdk1.7.0_25/bin/java: cannot execute: Not a directory

starting jobtracker, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-jobtracker-girishniyer.out

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 350: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

/opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: exec: /usr/bin/java/jdk1.7.0_25/bin/java: cannot execute: Not a directory

localhost: starting tasktracker, logging to /opt/hadoop/hadoop/libexec/../logs/hadoop-hadoop-tasktracker-girishniyer.out

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 350: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: /usr/bin/java/jdk1.7.0_25/bin/java: Not a directory

localhost: /opt/hadoop/hadoop/libexec/../bin/hadoop: line 434: exec: /usr/bin/java/jdk1.7.0_25/bin/java: cannot execute: Not a directory

Hi Girish,

Did you configure JAVA_HOME correctly?

Check this article and make sure Java is properly configured, as in step 5.4 (Edit hadoop-env.sh):

http://tecadmin.net/steps-to-install-java-on-centos-5-6-or-rhel-5-6/

Thank you, Rahul, for your quick reply.

I configured Java and exported the JAVA_HOME path as per your blog.

Now when I run “bin/hadoop namenode -format”,

I get the following:

bin/hadoop: line 350: /opt/jdk1.7.0_25/bin/java: No such file or directory

bin/hadoop: line 434: /opt/jdk1.7.0_25/bin/java: No such file or directory

bin/hadoop: line 434: exec: /opt/jdk1.7.0_25/bin/java: cannot execute: No such file or directory

When I run the which java command, the path displayed is as follows:

“/usr/bin/java”

When I run java -version, the output is as follows:

[hadoop@girishniyer hadoop]$ java -version

java version “1.7.0_25”

OpenJDK Runtime Environment (rhel-2.3.10.4.el6_4-x86_64)

OpenJDK 64-Bit Server VM (build 23.7-b01, mixed mode)

So I am a little confused.

Please help me. I am waiting for your reply.

Thanks in advance.

Hi Girish,

Step #5.4 is still not configured properly. How did you install Java on your system: from source or from an RPM?

Hi Rahul,

Java was already there when I installed CentOS.

I reinstalled CentOS 6.4 again.

Now the Java version is as follows:

”

[girishniyer@girishniyer Desktop]$ which java

/usr/bin/java

[girishniyer@girishniyer Desktop]$ java -version

java version “1.7.0_09-icedtea”

OpenJDK Runtime Environment (rhel-2.3.4.1.el6_3-x86_64)

OpenJDK 64-Bit Server VM (build 23.2-b09, mixed mode)

”

Do I need to reinstall Java after removing this one?

If so, can you please help me?

Hi Girish,

The default OpenJDK installation path should be /usr/lib/jvm/java-<java version>-openjdk-<java version>.x86_64/. Please check whether this path exists; if it does, add it to the configuration.

Otherwise, do a fresh Java install: http://tecadmin.net/steps-to-install-java-on-centos-5-6-or-rhel-5-6/
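One way to find that path without guessing is to resolve where the java on the PATH really lives. This is only a sketch: it assumes /usr/bin/java is a symlink chain ending in the real install (typical for packaged OpenJDK on CentOS) and that GNU readlink -f is available. java_home_from_binary is a hypothetical helper name.

```shell
# java_home_from_binary PATH_TO_JAVA
# Strip the trailing /bin/java (and a /jre component, if present) from the
# resolved path of a java binary to get a JAVA_HOME candidate.
# (java_home_from_binary is an illustrative helper, not part of Hadoop or Java.)
java_home_from_binary() {
    home="${1%/bin/java}"
    home="${home%/jre}"
    printf '%s\n' "$home"
}

if java_bin="$(command -v java)"; then
    # readlink -f follows symlinks such as /usr/bin/java to the real binary
    real_bin="$(readlink -f "$java_bin")"
    echo "JAVA_HOME candidate: $(java_home_from_binary "$real_bin")"
else
    echo "java is not on the PATH"
fi
```

Whatever path it prints should exist as a directory before it goes into hadoop-env.sh. The “Not a directory” and “No such file or directory” errors earlier in this thread both come from a JAVA_HOME that does not.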

Hello,

I had the same error. It was because my Java home was set to /opt/jdk1.7.0.25/, without the underscore that is in jdk1.7.0_25. Maybe this helps you!

Good article !!

This article is really helpful, and great work. Thank you.

In step 4, when I type tar -xzf hadoop-1.2.0.tar.gz

the following error is displayed. Could you please help?

tar (child): hadoop-1.2.0.tar.gz: Cannot open: No such file or directory

tar (child): Error is not recoverable: exiting now

tar: Child returned status 2

tar: Error is not recoverable: exiting now

Hi Girish,

Hadoop 1.2.1 is now the latest stable version, so the name of the downloaded archive has changed.

We have updated step 4 of the article. Kindly try again now.
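Since the archive name changes with each release, a small guard like the sketch below avoids the tar error by checking for the file first. extract_archive is a hypothetical helper; the file name passed to it should be whatever was actually downloaded.

```shell
# extract_archive FILE
# Extract a .tar.gz archive into the current directory, but only if it exists.
# (extract_archive is an illustrative helper, not part of Hadoop.)
extract_archive() {
    if [ ! -f "$1" ]; then
        echo "No such archive: $1 (check the downloaded file name with ls)" >&2
        return 1
    fi
    tar -xzf "$1"
}

# Usage, with the 1.2.1 archive mentioned above:
#   extract_archive hadoop-1.2.1.tar.gz
```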

This is a really great article for an entry-level person who would like to see what Hadoop looks like and play around with it. Great job!

Also, it would be great if you added how to stop Hadoop at the end of your article.

Thank you once again.

Hi Raja,

Thanks for the appreciation.

We have added a last step (Step 8) on how to stop all Hadoop services.

How do I add a new node to this cluster?
