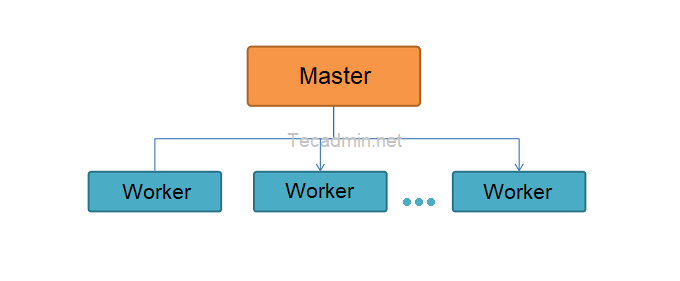

The master-worker model, sometimes referred to as the master-slave model, is a design pattern used primarily in parallel and distributed computing. This model is structured such that one primary process (the master) delegates tasks to one or more secondary processes (workers). Once tasks are assigned, each worker process is responsible for completing its task and then reporting back to the master with the results.
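As a minimal sketch of the delegate-and-report cycle described above, the following Python uses a thread pool to stand in for the worker processes. The `square` task and the `run_master` helper are hypothetical names chosen for illustration, and a real deployment would more likely use separate processes or machines.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def square(n: int) -> int:
    """Hypothetical unit of work carried out by a worker."""
    return n * n


def run_master(tasks, num_workers: int = 4) -> dict:
    """Master: hand each task to the pool, then gather results keyed by task."""
    results = {}
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Delegate: one future per task, remembering which task it belongs to.
        futures = {pool.submit(square, task): task for task in tasks}
        # Gather: each worker "reports back" when its future completes.
        for future in as_completed(futures):
            results[futures[future]] = future.result()
    return results


print(sorted(run_master(range(5)).items()))
# → [(0, 0), (1, 1), (2, 4), (3, 9), (4, 16)]
```

The master here never computes a square itself; it only submits tasks and collects what the workers return.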

Key Characteristics:

- Decentralization of Work: In the basic form of the model, the master does not perform the computation itself; it focuses on distributing tasks and gathering results.

- Autonomy of Workers: Workers can perform tasks without further interaction with the master until it’s time to return the results.

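- Fault Tolerance: If one or more workers fail, the computation can still proceed, provided the master detects the failure and reassigns the affected tasks to the remaining workers. The cost is reduced speed, since fewer workers share the same load.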

Benefits:

- Load Balancing: The master can spread work evenly across worker nodes, for example by handing the next task to whichever worker becomes idle, so that no node sits unused while others are overloaded.

- Flexibility: New worker nodes can be added or removed as needed without significantly affecting the master node’s operations.

- Scalability: The system can be scaled up or down easily, depending on the workload, by simply increasing or decreasing the number of worker nodes.

- Efficiency: By running tasks in parallel, the master-worker model can shorten computation times for problems that can be decomposed into independent sub-tasks.
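One common way to get the load-balancing and scalability properties listed above is a shared task queue from which idle workers pull their next task. The sketch below assumes threads as stand-in workers, a `None` sentinel to signal shutdown, and a placeholder computation (`task * 2`); all of these names and choices are illustrative rather than part of the model itself.

```python
import queue
import threading


def worker(worker_id: int, tasks: queue.Queue, results: queue.Queue) -> None:
    """Pull tasks until a None sentinel arrives, reporting each result."""
    while True:
        task = tasks.get()
        if task is None:  # sentinel: no more work for this worker
            break
        results.put((worker_id, task, task * 2))  # placeholder computation


def run(task_list, num_workers: int) -> list:
    """Master: start workers, enqueue tasks, then collect every result."""
    tasks, results = queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(i, tasks, results))
        for i in range(num_workers)
    ]
    for thread in threads:
        thread.start()
    for task in task_list:
        tasks.put(task)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker, queued after all tasks
    for thread in threads:
        thread.join()
    collected = []
    while not results.empty():  # safe: all workers have finished
        collected.append(results.get())
    return collected


output = run(range(6), num_workers=3)
print(sorted((task, result) for _, task, result in output))
# → [(0, 0), (1, 2), (2, 4), (3, 6), (4, 8), (5, 10)]
```

Because workers pull tasks rather than receiving a fixed share up front, a faster worker naturally takes on more tasks, and scaling up or down amounts to changing `num_workers`. Which worker handles which task varies from run to run.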

Limitations:

- Single Point of Failure: If the master fails, it can disrupt the entire system. However, this limitation can be mitigated through the use of backup or redundant master nodes.

- Potential Bottlenecks: The master can become a bottleneck if it cannot assign tasks or gather results quickly enough, especially in scenarios with a large number of worker nodes.
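A rough back-of-the-envelope model can make the bottleneck concrete. Assume, purely for illustration, that the master spends a fixed `master_seconds_per_task` dispatching each task and collecting its result, and that each worker needs `task_seconds` per task. System throughput is then capped by whichever side is slower. The function and parameter names are hypothetical, and the model ignores network latency and variance in task duration.

```python
def throughput(num_workers: int, task_seconds: float,
               master_seconds_per_task: float) -> float:
    """Tasks per second under a simplified model (an assumption, not a law)."""
    worker_rate = num_workers / task_seconds      # what the workers could sustain
    master_rate = 1.0 / master_seconds_per_task   # what the master can feed them
    return min(worker_rate, master_rate)


# Example: 2.0 s per task on a worker, 0.25 s of master overhead per task.
for n in (4, 8, 16):
    print(n, throughput(n, task_seconds=2.0, master_seconds_per_task=0.25))
# → 4 2.0
# → 8 4.0
# → 16 4.0
```

Under these assumptions, adding workers beyond `task_seconds / master_seconds_per_task` (8 in the example) yields no further gain, because the master cannot hand out tasks any faster.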

Example: Distributed Web Crawling

Imagine you’re designing a system to crawl the web and index websites. A master-worker model can be employed to achieve this efficiently.

- Master Node: The master node maintains a list of URLs to be crawled. It distributes these URLs to available worker nodes.

- Worker Nodes: Each worker node receives a list of URLs from the master. It then fetches each webpage and extracts its content along with any URLs linked from that page. Once done, the worker sends the extracted content and the newly found URLs back to the master.

- Result Aggregation: As workers return data, the master node aggregates the content and adds new URLs to its list for future crawling.

- Handling Failures: If a worker node fails or becomes unresponsive, the master can reassign its URLs to another worker, so that a worker failure does not result in missed content.

Through the master-worker model, the web crawling process can be highly parallelized, making it possible to index vast portions of the web quickly.

Conclusion

The master-worker model provides an efficient and scalable approach to parallel and distributed computing. By delegating tasks to workers and aggregating their results, it keeps coordination simple and puts the available worker nodes to use. While it comes with its challenges, such as the master being a potential bottleneck and single point of failure, its benefits in scalability and flexibility often outweigh the downsides, making it a popular choice for many distributed computing applications.