Apache Kafka is an open-source, distributed event streaming platform developed by the Apache Software Foundation. It is written in Scala and Java, and you can install it on any platform that supports Java.

This tutorial walks you step by step through installing Apache Kafka on an Ubuntu 20.04 LTS Linux system. You will also learn how to create topics in Kafka and run producer and consumer processes.

Prerequisites

You need access to an Ubuntu 20.04 Linux system through an account with sudo privileges.

Step 1 – Installing Java

Apache Kafka runs on any platform supported by Java, so you need to install Java before setting up Kafka on Ubuntu. Because Oracle Java is now commercially licensed, this tutorial uses the open-source OpenJDK instead.

Run the commands below to install OpenJDK from the default Ubuntu repositories.

sudo apt update
sudo apt install default-jdk

Verify the current active Java version.

java -version

openjdk version "11.0.9.1" 2020-11-04

OpenJDK Runtime Environment (build 11.0.9.1+1-Ubuntu-0ubuntu1.20.04)

OpenJDK 64-Bit Server VM (build 11.0.9.1+1-Ubuntu-0ubuntu1.20.04, mixed mode, sharing)
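If you want to check the Java version from a script rather than by eye, a minimal sketch might look like the following. The helper name `java_major` is hypothetical and not part of any Java or Kafka tooling. It assumes the version string has the form shown above ("11.0.9.1") or the legacy form used by Java 8 ("1.8.0_292").

```shell
#!/bin/sh
# Hypothetical helper: print the major Java version for a version string.
# Handles the modern scheme ("11.0.9.1") and the legacy one ("1.8.0_292").
java_major() {
    case "$1" in
        1.*) echo "$1" | cut -d. -f2 ;;   # legacy scheme: 1.8.x -> 8
        *)   echo "$1" | cut -d. -f1 ;;   # modern scheme: 11.x -> 11
    esac
}

# `java -version` prints to stderr, so redirect it before parsing.
if command -v java >/dev/null 2>&1; then
    installed=$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    echo "Detected Java major version: $(java_major "$installed")"
fi
```

The parsing is kept in a small function so it can be reused, for example to abort an install script early when Java is missing or too old.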

Step 2 – Download Latest Apache Kafka

Download the Apache Kafka binary files from its official download website. You can also select any nearby mirror to download.

wget https://dlcdn.apache.org/kafka/3.2.0/kafka_2.13-3.2.0.tgz
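Optionally, you can verify the download before using it. The sketch below compares the archive's SHA-512 digest with a value you copy by hand from the Apache download page. The helper name `verify_sha512` is hypothetical, and the expected checksum is left as a placeholder because it is not given in this tutorial.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if FILE's SHA-512 digest equals EXPECTED.
# Spaces, newlines and upper-case hex digits in EXPECTED are normalised first,
# since published checksum files are not always a single lower-case string.
verify_sha512() {
    file=$1
    expected=$(printf '%s' "$2" | tr -d ' \n' | tr 'A-F' 'a-f')
    actual=$(sha512sum "$file" | cut -d' ' -f1)
    [ "$actual" = "$expected" ]
}

# Example usage (replace the placeholder with the published checksum):
# verify_sha512 kafka_2.13-3.2.0.tgz "<published sha512>" && echo "checksum OK"
```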

Then extract the archive and move it to /usr/local/kafka:

tar xzf kafka_2.13-3.2.0.tgz
sudo mv kafka_2.13-3.2.0 /usr/local/kafka

Step 3 – Creating Systemd Unit Files

Now create systemd unit files for the ZooKeeper and Kafka services. These make it easy to start and stop the services.

First, create a systemd unit file for Zookeeper:

sudo vim /etc/systemd/system/zookeeper.service

And add the following content:

[Unit]
Description=Apache Zookeeper server
Documentation=http://zookeeper.apache.org
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
ExecStart=/usr/local/kafka/bin/zookeeper-server-start.sh /usr/local/kafka/config/zookeeper.properties
ExecStop=/usr/local/kafka/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target

Save the file and close it.

Next, create a systemd unit file for the Kafka service:

sudo vim /etc/systemd/system/kafka.service

Add the below content. Make sure to set the correct JAVA_HOME path as per the Java installed on your system.

[Unit]
Description=Apache Kafka Server
Documentation=http://kafka.apache.org/documentation.html
Requires=zookeeper.service

[Service]
Type=simple
Environment="JAVA_HOME=/usr/lib/jvm/java-1.11.0-openjdk-amd64"
ExecStart=/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties
ExecStop=/usr/local/kafka/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target

Save the file and close it.
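If you are unsure which JAVA_HOME value to put in the Kafka unit file, one way to find it is to resolve the real path of the `java` binary and strip the trailing `/bin/java`. This is only a sketch: the helper name `java_home_from_bin` is hypothetical, and it assumes the usual Ubuntu layout in which `/usr/bin/java` is a symlink into `/usr/lib/jvm`.

```shell
#!/bin/sh
# Hypothetical helper: derive JAVA_HOME from the resolved path of the java binary
# by removing the trailing "/bin/java" component.
java_home_from_bin() {
    printf '%s\n' "${1%/bin/java}"
}

if command -v java >/dev/null 2>&1; then
    # readlink -f follows the whole symlink chain to the real binary.
    java_home_from_bin "$(readlink -f "$(command -v java)")"
fi
```

The printed directory can then be used as the JAVA_HOME value in the `Environment=` line.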

Reload the systemd daemon to apply the new changes.

sudo systemctl daemon-reload

Step 4 – Start Kafka and Zookeeper Service

First, you need to start the ZooKeeper service and then start Kafka. Use the systemctl command to start a single-node ZooKeeper instance.

sudo systemctl start zookeeper

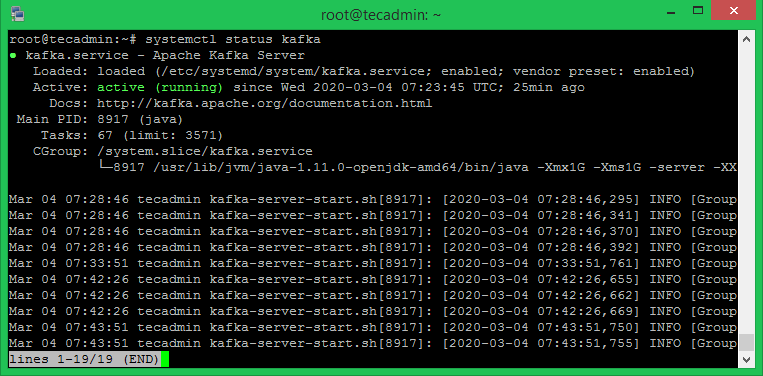

Now start the Kafka server and view the running status:

sudo systemctl start kafka
sudo systemctl status kafka

All done. The Kafka installation is complete. The next part of this tutorial shows you how to work with the Kafka server.

Step 5 – Create a Topic in Kafka

Kafka ships with several shell scripts for working with the server. First, create a topic named “testTopic” with a single partition and a single replica:

cd /usr/local/kafka
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic testTopic

Created topic testTopic.

The replication factor defines how many copies of the data are kept. Because we are running a single broker, keep this value at 1.

The partitions option sets how many partitions the topic's data is split into, and those partitions can be spread across brokers. Because we are running a single broker, keep this value at 1.
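The constraint on the replication factor can be sketched as a small pre-flight check: the factor has to be at least 1 and cannot exceed the number of brokers, otherwise topic creation is rejected. The function name `valid_replication_factor` is hypothetical, and the broker count is passed in by hand rather than queried from the cluster.

```shell
#!/bin/sh
# Hypothetical pre-flight check: a replication factor must be at least 1
# and cannot exceed the number of brokers in the cluster.
valid_replication_factor() {
    brokers=$1
    factor=$2
    [ "$factor" -ge 1 ] && [ "$factor" -le "$brokers" ]
}

# Single-broker setup from this tutorial: only a factor of 1 passes.
if valid_replication_factor 1 1; then
    echo "replication factor 1 is valid for 1 broker"
fi
```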

You can create more topics by running the same command with a different topic name. To list the topics on the Kafka server, run the command below:

bin/kafka-topics.sh --list --bootstrap-server localhost:9092

[output]
testTopic

Alternatively, instead of manually creating topics you can also configure your brokers to auto-create topics when a non-existent topic is published to.
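Auto-creation is controlled by the broker setting `auto.create.topics.enable`, which defaults to `true` when it is not set. As a rough sketch, the following reads the effective value from a broker properties file. The helper name `auto_create_setting` is hypothetical, and the path assumes the install location used in this tutorial.

```shell
#!/bin/sh
# Hypothetical helper: print the effective auto.create.topics.enable value
# from a broker properties file, falling back to the default of "true".
# Commented-out lines are ignored; the last matching line wins.
auto_create_setting() {
    value=$(sed -n 's/^[[:space:]]*auto\.create\.topics\.enable[[:space:]]*=[[:space:]]*//p' "$1" \
        | tail -n 1 | tr -d '[:space:]')
    printf '%s\n' "${value:-true}"
}

conf=/usr/local/kafka/config/server.properties
if [ -f "$conf" ]; then
    echo "auto.create.topics.enable=$(auto_create_setting "$conf")"
fi
```

To turn auto-creation off, you would add `auto.create.topics.enable=false` to server.properties and restart the Kafka service.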

Step 6 – Send and Receive Messages in Kafka

The “producer” is the process responsible for putting data into Kafka. Kafka comes with a command-line client that takes input from a file or from standard input and sends it as messages to the Kafka cluster. By default, each line is sent as a separate message.

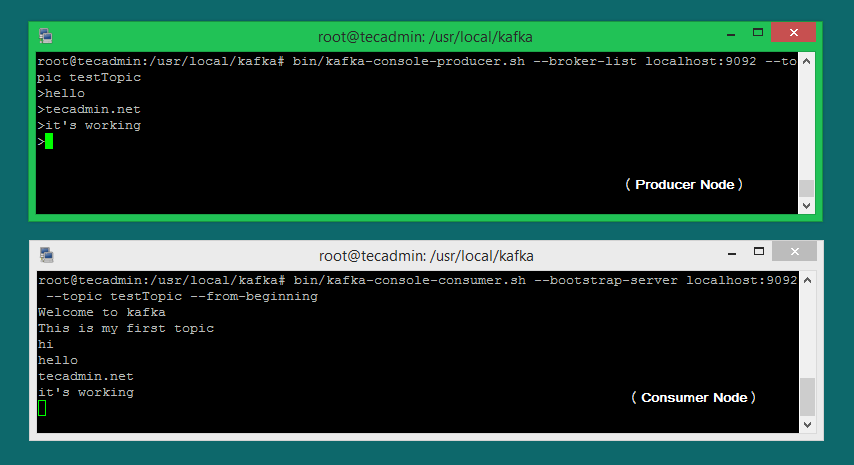

Let’s run the producer and then type a few messages into the console to send to the server.

bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic testTopic
>Welcome to kafka
>This is my first topic
>

You can exit this command or keep this terminal open for further testing. Now open a new terminal for the Kafka consumer process in the next step.
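As mentioned earlier, the console producer can also read its input from a file instead of the keyboard. A minimal sketch, assuming Kafka is installed in /usr/local/kafka and the broker listens on localhost:9092 as in this tutorial (the temporary file and the variable names are only illustrative):

```shell
#!/bin/sh
# Assumption: Kafka lives in /usr/local/kafka unless KAFKA_HOME says otherwise.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka}"
msg_file=$(mktemp)

# Write one message per line; each line becomes a separate Kafka message.
printf '%s\n' "Welcome to kafka" "This is my first topic" > "$msg_file"

# Send the file only when the producer script is present on this machine.
if [ -x "$KAFKA_HOME/bin/kafka-console-producer.sh" ]; then
    "$KAFKA_HOME/bin/kafka-console-producer.sh" \
        --bootstrap-server localhost:9092 --topic testTopic < "$msg_file"
fi
```

Because the input is redirected from a file, the producer exits on its own once it reaches the end of the file.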

Step 7 – Using Kafka Consumer

Kafka also has a command-line consumer to read data from the Kafka cluster and display messages to standard output.

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic testTopic --from-beginning

Welcome to kafka
This is my first topic

If the Kafka producer from Step 6 is still running in another terminal, type some text there. It will appear immediately in the consumer terminal. The screenshot below shows the Kafka producer and consumer at work:

Conclusion

This tutorial helped you install and configure the Apache Kafka service on an Ubuntu system. You also learned how to create a new topic on the Kafka server and run a sample producer and consumer with Apache Kafka.
