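HDFS is the Hadoop Distributed File System, a distributed storage system for large data sets that is designed for fault tolerance, high throughput, and scalability. It works by splitting each file into large blocks and replicating every block across several machines (DataNodes) in the cluster. Different blocks can be read in parallel from different machines, which gives high aggregate throughput. Because each block has several copies, the failure of a single machine does not lose data. The result is redundancy similar in spirit to RAID mirroring, but across machines rather than disks: when a DataNode fails, the NameNode automatically re-replicates its blocks from the remaining copies. HDFS also supports transparent encryption, and files can be stored compressed using Hadoop's compression codecs.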
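As a rough illustration of the block and replication scheme, the sketch below estimates how many blocks a file occupies and how much raw disk space its replicas consume. It assumes the stock defaults (dfs.blocksize of 128 MiB and dfs.replication of 3), which your cluster may override. The helper names hdfs_block_count and hdfs_raw_bytes are hypothetical. On a real cluster, running hdfs fsck on a file with the -files -blocks options reports its actual blocks.

```bash
# Sketch: block count and raw replica usage for a file of a given size.
# Assumed defaults: dfs.blocksize = 128 MiB, dfs.replication = 3.
# Override BLOCK_SIZE / REPLICATION to match your cluster.
BLOCK_SIZE=${BLOCK_SIZE:-134217728}   # 128 MiB in bytes
REPLICATION=${REPLICATION:-3}

# Hypothetical helper: blocks = ceil(size / block_size).
# The last block may be only partly full.
hdfs_block_count() {
  echo $(( ($1 + BLOCK_SIZE - 1) / BLOCK_SIZE ))
}

# Hypothetical helper: a partly full block only takes as much disk as the
# data in it. Raw usage is therefore size * replication, not
# blocks * block_size * replication.
hdfs_raw_bytes() {
  echo $(( $1 * REPLICATION ))
}

# Example: a 300 MiB file is split into 128 + 128 + 44 MiB blocks.
size=$((300 * 1024 * 1024))
echo "blocks:    $(hdfs_block_count "$size")"
echo "raw bytes: $(hdfs_raw_bytes "$size")"
```

For the 300 MiB example, this prints 3 blocks and 943718400 raw bytes, which is 900 MiB spread over three replicas of each block.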

The most common use case for HDFS is storing large collections of data such as image and video files, logs, sensor data, and so on.

Creating a Directory Structure in HDFS

The “hdfs” command-line utility is located in the ${HADOOP_HOME}/bin directory. The steps below assume that this directory is already included in your PATH environment variable. Log in as the Hadoop user and follow these instructions.
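If the bin directory is not on your PATH yet, you can add it once, for example from ~/.bashrc. The sketch below uses add_hadoop_to_path, a hypothetical helper name. It assumes HADOOP_HOME already points at your Hadoop installation.

```bash
# Hypothetical helper: append ${HADOOP_HOME}/bin to PATH unless it is already there.
# Assumes HADOOP_HOME is set to the Hadoop installation directory.
add_hadoop_to_path() {
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  local bin="$HADOOP_HOME/bin"
  # Wrapping PATH in colons lets one pattern match the entry at any position.
  case ":$PATH:" in
    *":$bin:"*) ;;                  # already present: leave PATH unchanged
    *) export PATH="$PATH:$bin" ;;
  esac
}
```

After calling add_hadoop_to_path, run hdfs version. If it prints the installed Hadoop version, the shell can find the utility. Calling the function a second time leaves PATH unchanged.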

- Create a /data directory in the HDFS file system. I will use this directory to hold all application data.

hdfs dfs -mkdir /data

- Create another directory, /var/log, to hold all the log files. The /var directory does not exist yet either, so use -p to create the parent directory as well.

hdfs dfs -mkdir -p /var/log

- You can also use shell variables when creating directories. For example, create a directory named after the currently logged-in user to hold that user's data.

hdfs dfs -mkdir -p /Users/$USER
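The three steps above can also be combined into one reusable script. The sketch below assumes hdfs is on your PATH. The run and create_layout functions and the DRY_RUN variable are hypothetical names introduced for this example. Setting DRY_RUN=1 only prints the commands, so you can review them before running anything.

```bash
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create /data, /var/log and a per-user directory.
create_layout() {
  local user_dir="/Users/${USER:-$(id -un)}"
  # -mkdir accepts several paths at once. -p creates missing parents
  # and does not fail if a directory already exists.
  run hdfs dfs -mkdir -p /data /var/log "$user_dir" || return 1
  # -test -d exits with status 0 only if the path is a directory.
  for dir in /data /var/log "$user_dir"; do
    run hdfs dfs -test -d "$dir" || echo "missing: $dir" >&2
  done
}
```

Run DRY_RUN=1 create_layout to preview the four hdfs commands, then run create_layout to apply them.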

Changing File Permissions with HDFS

You can also change file ownership and permissions in the HDFS file system.

- To change the owner and group of files, use the -chown option. The -R flag applies the change recursively, and only the HDFS superuser can change a file's owner:

hdfs dfs -chown -R $HADOOP_USER:$HADOOP_USER /Users/hadoop

- To change file permissions, use the -chmod option:

hdfs dfs -chmod -R 775 /Users/hadoop
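Each digit of an octal mode such as 775 is the sum of read (4), write (2), and execute (1). The three digits apply to the owner, the group, and others, so 775 means rwxrwxr-x. The hypothetical octal_to_symbolic function below sketches that conversion for a three-digit mode. It does not handle setuid, setgid, or sticky bits. On a cluster, you can compare its result with the permission column printed by hdfs dfs -ls -d /Users/hadoop.

```bash
# Hypothetical helper: convert a three-digit octal mode (e.g. 775) to rwx form.
octal_to_symbolic() {
  local mode="$1" out="" rest digit
  while [ -n "$mode" ]; do
    rest=${mode#?}             # drop the first digit
    digit=${mode%"$rest"}      # keep only the first digit
    mode=$rest
    # Each digit is a sum of read (4), write (2) and execute (1).
    if [ $(($digit & 4)) -ne 0 ]; then out="${out}r"; else out="${out}-"; fi
    if [ $(($digit & 2)) -ne 0 ]; then out="${out}w"; else out="${out}-"; fi
    if [ $(($digit & 1)) -ne 0 ]; then out="${out}x"; else out="${out}-"; fi
  done
  echo "$out"
}

octal_to_symbolic 775   # owner rwx, group rwx, others r-x
```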

Copying Files to HDFS

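The hdfs dfs command provides the -put and -get options to copy files to and from the HDFS file system. -put copies local files into HDFS, and -get copies files from HDFS to the local file system.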

- For example, to copy a single file from the local file system to HDFS:

hdfs dfs -put ~/testfile.txt /var/log/

- To copy multiple files or a directory tree, use wildcard characters. The local shell expands the wildcard before hdfs runs:

hdfs dfs -put ~/log/* /var/log/

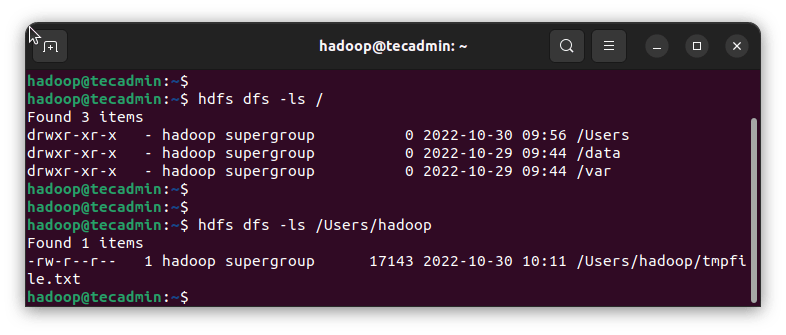
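To check that a file copied with -put is intact, you can copy it back with -get and compare it with the original. The sketch below assumes hdfs is on your PATH and that the target HDFS directory already exists. The name roundtrip_check is hypothetical. The sketch uses -put -f, which overwrites the file in HDFS if it is already there.

```bash
# Hypothetical helper: upload a local file, download it again and compare bytes.
# Usage: roundtrip_check LOCAL_FILE HDFS_DIR
roundtrip_check() {
  local src="$1" hdfs_dir="$2" name tmp status
  name=$(basename "$src")
  tmp=$(mktemp -d) || return 1
  # -put copies local -> HDFS (-f overwrites); -get copies HDFS -> local;
  # cmp -s exits 0 only if both files are byte-for-byte identical.
  if hdfs dfs -put -f "$src" "$hdfs_dir/" &&
     hdfs dfs -get "$hdfs_dir/$name" "$tmp/$name" &&
     cmp -s "$src" "$tmp/$name"; then
    status=0
  else
    status=1
  fi
  rm -rf "$tmp"     # remove the temporary download directory
  return "$status"
}
```

For example, roundtrip_check ~/testfile.txt /var/log exits with status 0 when the file stored in /var/log matches the local copy.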

Listing Files in HDFS

When working with a Hadoop cluster, you can view files in the HDFS file system from the command line or through a web-based GUI.

- Use the

-lsoption with hdfs to list files in the HDFS file system. For example to list all files on the root directory use:hdfs dfs -ls / - The same command can be used to list files from subdirectories as well.

hdfs dfs -ls /Users/hadoopYou should get the following output:

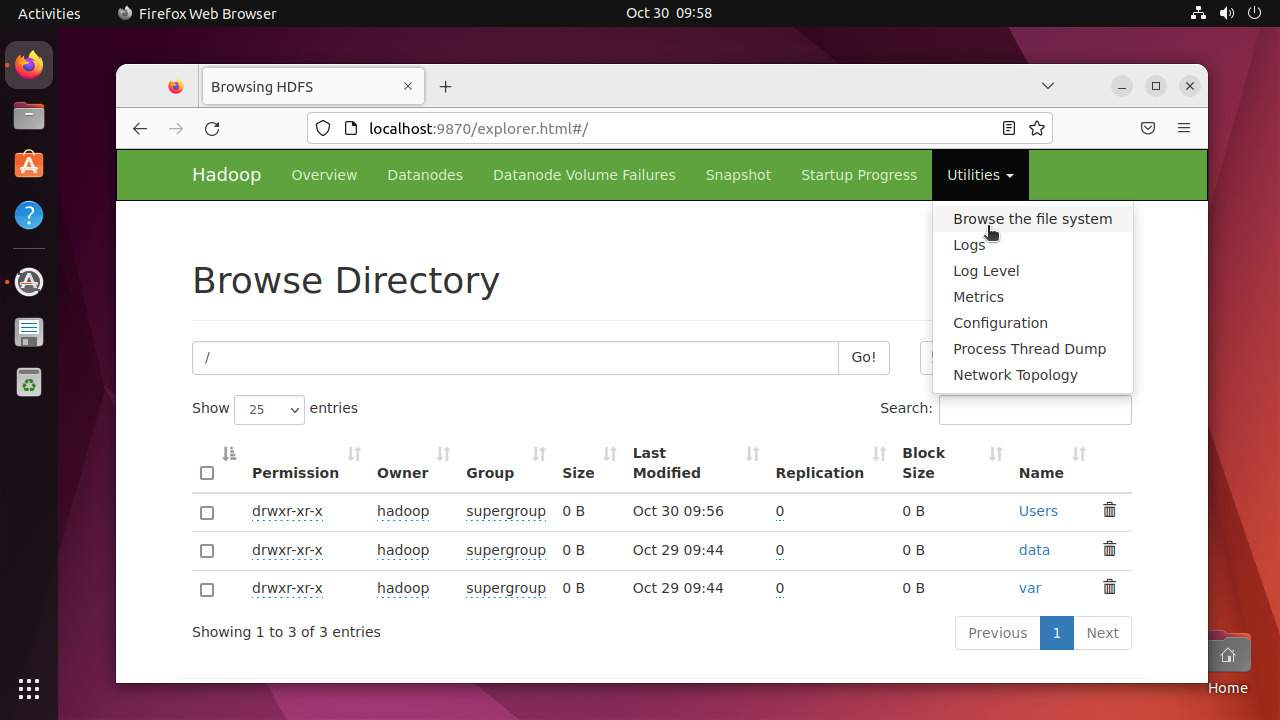

List files in HDFS - Rather than the command line, Hadoop also provides a graphical explorer to view, download and upload files easily. Browse the HDFS file system on the NameNode port at the following URL:

http://localhost:9870/explorer.html

Browse files in HDFS
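Each line that hdfs dfs -ls prints for a regular file starts with a permission string beginning with "-". The line then lists the replication factor, owner, group, size in bytes, modification date, modification time, and path. Because of this fixed layout, a small awk filter can pick out just the files and their sizes. The function name ls_files_only is hypothetical, and the sketch assumes paths without spaces.

```bash
# Hypothetical filter: read "hdfs dfs -ls" output on stdin and print
# "size<TAB>path" for regular files only (permission string starts with "-").
# Assumes the default column layout and paths without spaces.
ls_files_only() {
  awk '$1 ~ /^-/ { print $5 "\t" $8 }'
}

# Example on a cluster (recursive listing of the log directory):
#   hdfs dfs -ls -R /var/log | ls_files_only
```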

Conclusion

HDFS also serves as the storage layer for other Hadoop applications, such as MapReduce jobs that process large volumes of data. It also provides user authentication (through Kerberos) and access control through file permissions and ACLs. HDFS can also be combined with object stores such as Amazon S3 and OpenStack Swift to build hybrid cloud solutions that pair high availability and low latency with low-cost storage.

In this article, you have learned about creating a directory structure in the HDFS file system, changing permissions, and copying and listing files with HDFS.